Kaggle Competition #melbdatathon2017

This will be more of a play-by-play of highlights on community, research, and the basics of data science, told through examples of what I did rather than how or, academically, why 👨‍🎓.

It all started with a casual conversation that led me to the Data Science Melbourne #Datathon kickoff night on Thursday last week.

Kickoff night

The first thing was lining up with my laptop to copy the data.

Busy, yes, but also very well organised.

Once I was at the table the copy was quick, and I was off with a few quick cat, head and grep checks.
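If you would rather take that first look from Python than from the shell, here is a minimal sketch of head- and grep-style helpers using only the standard library. They accept any iterable of lines, so an open file works; the file name in the usage comment is hypothetical.

```python
import itertools
import re


def head(lines, n=5):
    """Return the first n lines without trailing newlines, like `head -n`."""
    return [line.rstrip("\n") for line in itertools.islice(lines, n)]


def grep_count(lines, pattern):
    """Count lines matching a regular expression, like `grep -c`."""
    regex = re.compile(pattern)
    return sum(1 for line in lines if regex.search(line))


# Usage, with a hypothetical file name:
# with open("transactions_1.txt", encoding="utf-8") as f:
#     print(head(f))
```

Because `islice` stops after n lines, `head` never reads the rest of a large file.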

The data

Until that moment I wasn't aware of the type of data we were getting. I learned it was related to the medical industry, which is both scary, because I've never worked in this sector, and exciting for the same reason.

The event attendees all signed NDAs, so beyond what has been made publicly available I can't go into details.

Community

I am huge on collaboration and community; I think it's my inner mentor taking over my professional self at times. I walked around the room looking for some indication of how we would communicate during the event 👀 and asked a few people, but no one knew.

So I met the organiser, Phil Brierley. He had arranged a forum (how quaint), so I offered Slack. Phil is a Slack user, so he was pretty keen for someone to take the lead on that for the event. Happy to oblige 😀

Around November last year (2016), I set up the Melbourne Developer Network, mainly to satisfy the need to connect and communicate daily with developers from all over the world while introducing them to developers from Melbourne, an awesome tech community.

Predict the onset of diabetes

On my way home, I familiarised myself with the Kaggle comp.

So I asked my close buddy, "Ok Google, how to win a Kaggle comp?"

Blog post: how to win a Kaggle competition

Goog knows everything 🔍 💡

I noticed something interesting:

It used to be random forest that was the big winner, but over the last six months a new algorithm called XGboost has cropped up, and it’s winning practically every competition in the structured data category.

Now, I'm a bit of a logistic regression go-to kind of guy, and I'm known to dabble in decision trees to better understand my data (though, unfortunately, what you'll see is more often an overfitted regression tree, but I try). So I was quite keen to learn something new for this competition.

What is XGBoost

So what is this magical silver bullet, XGBoost? As the name suggests (it stands for eXtreme Gradient Boosting), it's an implementation of gradient-boosted tree models. Here is what I found:

A Gentle Introduction to XGBoost for Applied Machine Learning was concise but lacked specifics, whereas this post on Gradient Boosting with XGBoost was perfect for my brain to consume.
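To make "boosted trees" a little more concrete, here is a toy sketch of plain gradient boosting for squared error, using one-split trees (stumps) on a single numeric feature. It is not XGBoost: XGBoost adds second-order gradients, regularisation and far smarter tree building. The core loop is the same idea, though: fit a small tree to what the current model gets wrong, then add a shrunken copy of it. The function names are made up for illustration.

```python
def fit_stump(xs, residuals):
    """Find the single threshold split on x that best fits the residuals (least squares)."""
    best = None
    for threshold in sorted(set(xs)):
        left = [r for x, r in zip(xs, residuals) if x <= threshold]
        right = [r for x, r in zip(xs, residuals) if x > threshold]
        if not right:
            continue
        left_mean = sum(left) / len(left)
        right_mean = sum(right) / len(right)
        error = (sum((r - left_mean) ** 2 for r in left)
                 + sum((r - right_mean) ** 2 for r in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    if best is None:  # every x is identical, so fall back to a constant
        mean = sum(residuals) / len(residuals)
        return lambda x: mean
    _, threshold, left_mean, right_mean = best
    return lambda x: left_mean if x <= threshold else right_mean


def gradient_boost(xs, ys, n_rounds=50, learning_rate=0.1):
    """Gradient boosting for squared error: each new stump fits the current residuals."""
    base = sum(ys) / len(ys)  # start from the mean prediction
    stumps = []

    def predict(x):
        return base + learning_rate * sum(stump(x) for stump in stumps)

    for _ in range(n_rounds):
        # For squared error, the negative gradient is simply y - prediction
        residuals = [y - predict(x) for x, y in zip(xs, ys)]
        stumps.append(fit_stump(xs, residuals))
    return predict


model = gradient_boost([1, 2, 3, 4, 5, 6], [1, 1, 1, 5, 5, 5])
print(round(model(2), 2), round(model(5), 2))  # 1.01 4.99
```

The small learning rate means each stump only closes part of the remaining gap, which is the shrinkage that helps boosted models avoid overfitting.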

My first Kaggle competition

I digress. Back to the comp: as a Kaggle beginner, I was after more of an introduction than a winning plan.

This post was actually quite helpful:

Titanic made easy - predict survival Competition

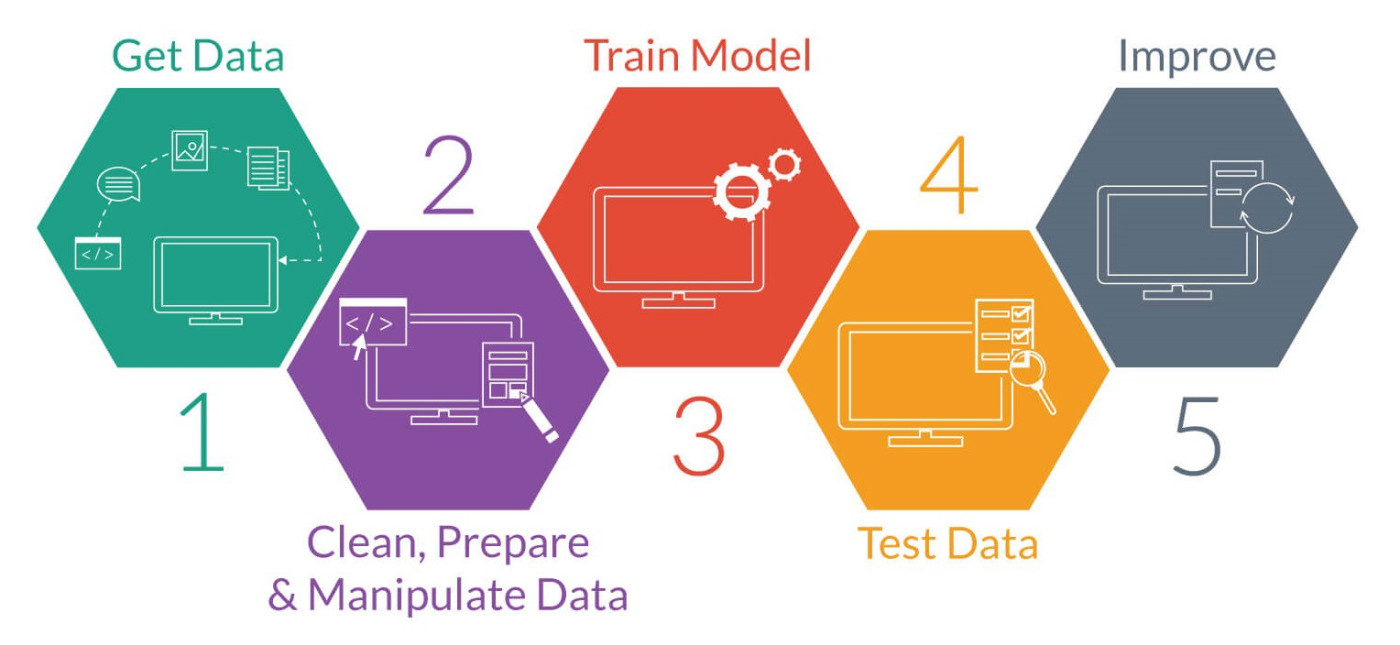

It introduced the DSS (Data Science Studio) tool, which I'd been exposed to before. The post is a great example, full of really useful tips, not just for a first-time Kaggler but also as an introduction to the general topics and concepts of ML, and a launch pad for researching specific things.

One thing you really must do before attempting any type of ML is calculate a baseline. It is really important and isn't talked about a great deal. You'll notice that DSS gives you various performance indicators for each algorithm, but you need the baseline to make sense of them at all.

So the first thing I needed to achieve was the baseline, an essential element of any machine learning endeavour. What is a baseline, and how does one calculate it?

Goog can be your friend too 🙃

Honestly, you will find well-written explanations and guides in Google's top results, far better than I could describe here.
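As a rough illustration of what a baseline can look like for a yes/no target such as diabetes onset, this sketch computes two common naive baselines: the accuracy of always predicting the most frequent class, and the log loss of predicting the overall positive rate for everyone. The labels are made up, and the metric the competition actually scored on is not covered here; the point is simply that any model worth keeping should beat these numbers on the same data.

```python
import math
from collections import Counter


def majority_class_baseline(labels):
    """Accuracy of always predicting the most common label (the 'zero rule')."""
    label, freq = Counter(labels).most_common(1)[0]
    return label, freq / len(labels)


def constant_probability_log_loss(labels):
    """Log loss of predicting the positive-class rate for every 0/1 label."""
    p = sum(labels) / len(labels)
    p = min(max(p, 1e-15), 1 - 1e-15)  # clip to avoid log(0)
    return -sum(y * math.log(p) + (1 - y) * math.log(1 - p) for y in labels) / len(labels)


# Hypothetical labels: 1 = developed diabetes, 0 = did not
y = [0, 0, 0, 1, 0, 0, 1, 0, 0, 0]
label, acc = majority_class_baseline(y)
print(label, acc)  # 0 0.8
print(round(constant_probability_log_loss(y), 4))  # 0.5004
```

With an 80/20 class split, a model reporting 80% accuracy has learned nothing, which is exactly why the baseline has to come first.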

Reading the data

All of the data was provided as CSV files, which on their own were obviously never going to be workable. We only had a small sample of data (740MB compressed), though, so we weren't likely to be diving into big data territory here. Python 🐍 to the rescue.

I loaded the CSV files into a Spark DataFrame and then converted that to a Pandas DataFrame for querying. A colleague recently introduced me to the Rodeo IDE, so this seemed like a great project to give it a try.

So, how much data do we have? Just under 60 million rows. Nice.

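```python
import os

import pandas as pd

path = "data"  # hypothetical: directory holding the extracted data files

total = 0
for file in os.listdir(path):
    # read_table defaults to tab-separated values with a header row
    df = pd.read_table(os.path.join(path, file))
    count = len(df)
    print(file + " " + str(count))
    total += count

print(total)  # 59,450,785
```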
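Loading every file into a DataFrame just to count it holds each whole file in memory. If only the count is needed, a streaming sketch like this one reads one line at a time. It assumes, as pd.read_table does by default, one header line per file and no quoted fields containing embedded newlines (those would be over-counted here). The function names and the UTF-8 encoding are assumptions.

```python
import os


def count_rows(lines, has_header=True):
    """Count data rows in an iterable of lines, skipping one header line if present."""
    n = sum(1 for _ in lines)
    return max(n - 1, 0) if has_header else n


def count_directory(path):
    """Print and total the row counts of every file in a directory, one file at a time."""
    total = 0
    for name in sorted(os.listdir(path)):
        with open(os.path.join(path, name), encoding="utf-8") as f:
            count = count_rows(f)
        print(name, count)
        total += count
    return total
```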

The next step is to split the data into the sets that will be used for training and testing models. There are a few schools of thought on this topic, and in academia k-fold cross-validation is hyped to the point that it has become something of a buzzword. Literally, while I was grepping the CSV files to see what we had, a random RMIT student threw it into the conversation: "have you tried k-fold to test the files?" Huh!? I didn't bother asking for clarification; I just tried, as casually as possible, to direct that question about k-fold to their professor or teacher.

In any case, since we're on the topic: I'll be using more of a holdout approach, and I'm more comfortable doing my validation this way for this problem specifically. Before we even get to that, though, we should understand the portions of our data and what each one is used for.

Test versus Train

You have the target feature that your model is going to predict. The train dataset is a portion that has already been scored: the actual results are known and included in the dataset. The test dataset is what you evaluate your model's predictions against. It also has known results and all of the same features as the train set, but the model never sees it during training.

Because this is a Kaggle competition, there is also a withheld portion of data (the records you make predictions for and submit), so that test set should be identified and split out for your submissions. So we end up with one train set and two test sets.
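A minimal sketch of getting from one pile of records to one train set and two test sets, assuming each record is a dict and that the withheld Kaggle records are the ones with no target value. The "target" field name, the 20% holdout fraction and the seed are all illustrative assumptions. If the data has several rows per patient, you would want to split by patient rather than by row so that no patient lands on both sides.

```python
import random


def three_way_split(rows, target="target", test_fraction=0.2, seed=42):
    """Return (train, test, submission) lists of dict rows.

    Rows without a target value are the withheld rows we must predict for Kaggle;
    the labelled rows are shuffled and split into train and holdout test sets.
    """
    submission = [r for r in rows if r.get(target) is None]
    labelled = [r for r in rows if r.get(target) is not None]
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    rng.shuffle(labelled)  # shuffles our copy, not the caller's list
    n_test = int(len(labelled) * test_fraction)
    return labelled[n_test:], labelled[:n_test], submission
```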

I spent several hours splitting the Pandas DataFrames out into HDF5 files to achieve this.

Just to be clear, now that we know how much data we have and how we will be using it: even though I'll be storing dataset samples as HDF5 files, I will not be using Hadoop (HDF5 is a file format, not the Hadoop Distributed File System).

Don't use Hadoop - your data isn't that big

Granted, we have 60 million rows of data, which pushes a SQL database to its limits almost immediately (not entirely true). But don't think that just because something is hard in a SQL database you have big data and must resort to solutions like Hadoop. That's a myth and an ill-conceived notion, and it usually has a simple remedy called Pandas.
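To show why tens of millions of rows don't automatically call for a cluster, here is a sketch of a streaming group-by that reads one row at a time, so memory grows with the number of distinct keys rather than the number of rows. It uses the standard csv module instead of Pandas (read_csv with its chunksize parameter followed by a groupby is the Pandas route), and the tab delimiter and the "postcode"/"cost" column names are hypothetical.

```python
import csv
from collections import defaultdict


def sum_by_key(lines, key_col, value_col, delimiter="\t"):
    """Stream rows from a delimited file and total value_col for each key_col."""
    totals = defaultdict(float)
    for row in csv.DictReader(lines, delimiter=delimiter):
        totals[row[key_col]] += float(row[value_col])
    return dict(totals)


# Usage, with a hypothetical file:
# with open("transactions_1.txt", encoding="utf-8", newline="") as f:
#     print(sum_by_key(f, "postcode", "cost"))
```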

Pandas reads and writes the HDF5 format directly (to_hdf and read_hdf), so it's purely a convenience for me.

Datathon insights competition

Of the two competitions, my experience leaves me far better equipped to do well in the insights portion, so I've spent a lot of time this week exploring the data in different ways.

The folks from Yellowfin sponsored the datathon and were kind enough to give us free licenses to use their platform. They have several data source connectors available, but none that work with the HDF5 files I've curated. MySQL, however, was one I was happy to try.

Using Docker

Before doing anything, you need an environment to work in. My computer is packed with all sorts of projects, both open-source ones I contribute to and ones for my full-time employment, so I generally use Docker now (previously I was big on Vagrant). Here is a compose file I put together for this event:

And the Python container:

Using MySQL

So I'm trying out Yellowfin, and one of its data sources is MySQL. Initially I was just using Pandas, but it isn't as expressive as SQL for quick queries. My go-to SQL database is usually MySQL, for several reasons:

- It's free, with good documentation, loads of users, and a great community for answers if you need them.

- MyISAM is exceptionally fast for read-heavy searching

- You can partition data in almost any way you can imagine

- It has a powerful and mature CLI

- There are solid and mature SDKs for practically any programming language

After a quick LOAD DATA INFILE sweep to get the data in, and running a few views of that data in Yellowfin, I quickly ascertained that I wasn't going to be very productive using MySQL, regardless of how well Yellowfin worked (it was well presented, with a lot of neat built-in visualisations such as graphs and scatter maps, which was pretty cool).

Although I can run some fast queries across the entire partitioned dataset with well-thought-out filters, any sort of aggregation and grouping really pushes the database's limits.

Using Elasticsearch

Bash is great for fetching and managing data as strings. Because I use Bash so much for automation and tooling, it is my preferred scripting language. Although it is very powerful at string manipulation, it isn't as human-friendly for viewing or visualising the results. Elasticsearch is, thanks to JSON, and I can still script everything in Bash.

Elasticsearch is generally a great tool for getting quick insights with expressive queries using nothing but curl, and on some massive projects in the past it has proven to me to be far superior to any traditional database engine. Here is some quick Bash to move data from MySQL into Elasticsearch:

```bash
#!/usr/bin/env bash
# Page through a MySQL table and index each row into Elasticsearch.
# <u>, <pw>, cols, tables and the JSON body are placeholders.

user="<u>"
password="<pw>"
host=mysql
es_host=es:9200
es_index=melbdatathon2017
es_type=transactions

max=279201   # total rows to copy
start=0      # LIMIT offset (0-based)
step=25      # rows per batch
document=0   # documents sent so far
sep=""       # separator so errors.json stays a valid JSON array

echo "[" > ./errors.json

while [ "$start" -lt "$max" ]
do
  # Process substitution keeps this loop in the current shell, so the counters survive it
  while IFS= read -r line
  do
    someCol=$(echo "$line" | cut -f1 | tr '[:upper:]' '[:lower:]')
    json="{/* stuff here */}"
    json=$(echo "${json}" | tr -d '\r\n' | tr -s ' ')

    es_response=$(curl -s -XPOST "${es_host}/${es_index}/${es_type}/?pretty" \
      -H 'Content-Type: application/json' -d "${json}")

    # Anything other than zero failed shards (including a missing field) is an error
    failed=$(echo "${es_response}" | jq -r "._shards.failed")
    if [ "${failed}" != "0" ]; then
      printf '%s%s\n' "${sep}" "${es_response}" >> ./errors.json
      sep=","
    fi

    document=$(( document + 1 ))
  done < <(mysql melbdatathon2017 -h"$host" -u"$user" -p"$password" -B -N -s \
             -e "SELECT cols FROM tables LIMIT ${start}, ${step};")

  start=$(( start + step ))
  echo "Stored: ${document}"
done

echo "]" >> ./errors.json
echo "Done: ${document}"
```

Data mining

For one of the visualisations I wanted to do, I needed the lon and lat to plot a scatter map, but all we had been provided were postcodes.

I put together a short Bash script to enrich my dataset.

Once I had the geo data for each postcode, it was a simple but long-running job to script a cursor lookup by postcode and enrich my dataset.
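The enrichment step amounts to a join on postcode. Here is a minimal Python sketch of that join, assuming the postcode-to-coordinates table fits in memory as a dict, so each row costs a dictionary lookup instead of a query. The field names and the sample coordinate (roughly the Melbourne CBD) are illustrative only.

```python
def enrich_with_coordinates(rows, postcode_coords, postcode_field="postcode"):
    """Return copies of dict rows with 'lat' and 'lon' added from a postcode lookup.

    Rows whose postcode is missing from the lookup get None for both.
    """
    enriched = []
    for row in rows:
        lat, lon = postcode_coords.get(row.get(postcode_field), (None, None))
        enriched.append({**row, "lat": lat, "lon": lon})
    return enriched


# Illustrative lookup: approximately the Melbourne CBD
lookup = {"3000": (-37.81, 144.96)}
print(enrich_with_coordinates([{"postcode": "3000"}], lookup))
```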

Using InfluxDB

Another useful way to store data for insights is as a time series. Yes, tools that read HDF5 files are good for this task, and MySQL and Elasticsearch are also very capable, but InfluxDB is designed specifically for this use case.

I am very big on choosing the right tool for the job.

It was a pretty trivial task to modify my MySQL-to-Elasticsearch script so that it inserted into InfluxDB instead, and once I had the data loaded, it became clear from what I was seeing that my goals required further research.

Research

One of the insight experiments I conducted involved cost over time. I won't go into specifics about what kind of cost we are talking about in the data, but I can say that it involved understanding the vague parameters of pricing under the Pharmaceutical Benefits Scheme. My conclusion is that there is a big black hole of information around what is classified as "the cost to the pharmacist", which tears apart my goals.

Hack day 1

Unable to attend in person, I made myself available over Slack and helped a few competitors throughout the day with problems in their environments or with understanding the data. This was only possible thanks to the awesome exposure my Slack team got.

I was sharing scripts and running some basic graph visualisations across the entire dataset. It was fun and insightful to be involved in different ways of thinking.

I didn't get much time to invest in my own work, but it was well worth it to talk to and meet some great people.

Next steps

I still need a great insights idea. I've worked on many small insights, but I'm looking for one that will have a profound benefit for someone. So I spoke to a friend who has diabetes for some first-hand experience, and he has been a great source of inspiration.

The insights competition doesn't actually need to be related to diabetes (only the Kaggle comp does), so I remain open-minded about the possibilities.
