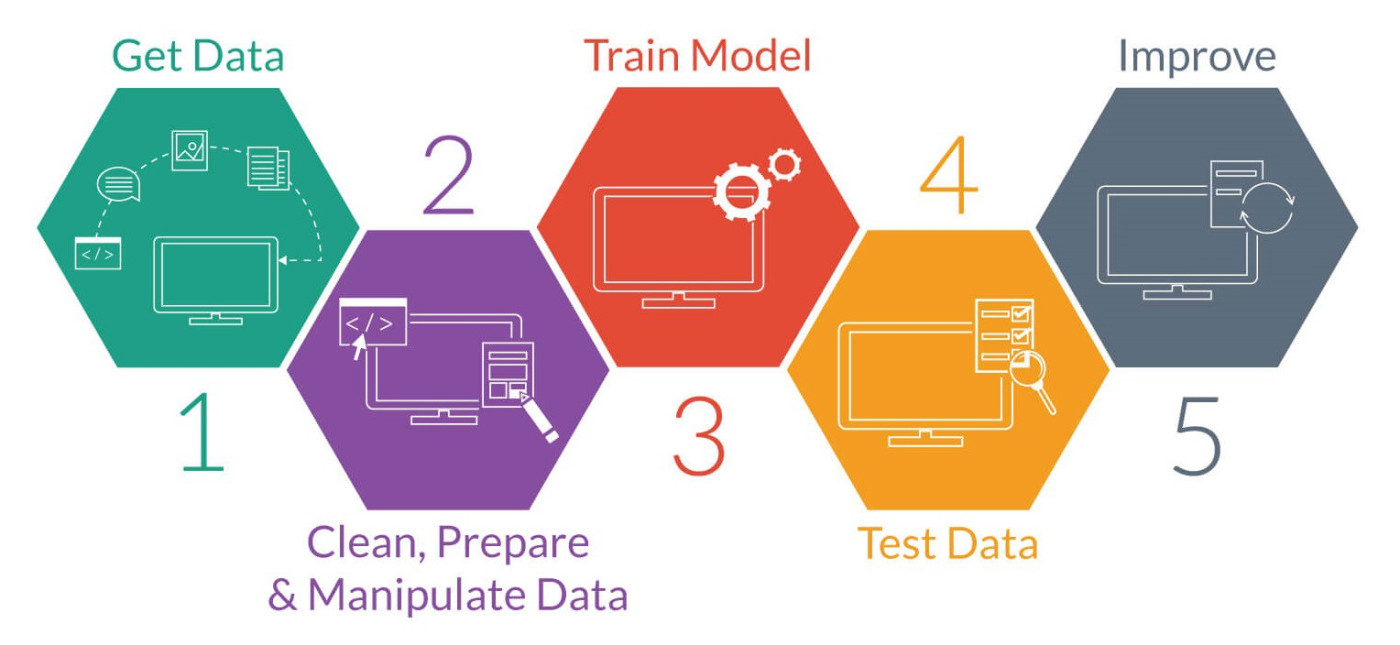

Machine Learning model training over time

Do you train new models using new data, or do you re-train existing models with new samples?

Retraining a model

For the past few years I've been capturing network traffic and building various machine learning models around the data, not always security related but always designed for a long-lasting purpose.

This means I have many different models, sometimes using the same features, all built from the same full data set. Retraining models on this exponentially growing data became unmanageable last year, so I took a new approach.

Versioning Models

Taking inspiration from my software engineering background, I already employ a strict versioning strategy so that I can reason about changes in my data and how models evolve over months and years.
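The exact versioning scheme is not spelled out here, so the following is only a minimal sketch of one way such a strategy might look. It assumes a semantic-version-like rule that is my own illustration: bump the major number when the feature list changes and the minor number when only the training data changes. The names `ModelVersion`, `fingerprint`, and `next_version` are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass


def fingerprint(rows):
    """Return a SHA-256 digest of the training rows (order-sensitive)."""
    payload = json.dumps(rows, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


@dataclass(frozen=True)
class ModelVersion:
    """Hypothetical version record tying a model to its features and data."""
    name: str
    major: int          # assumption: bumped when the feature list changes
    minor: int          # assumption: bumped when only the data changes
    features: tuple
    data_digest: str

    @property
    def tag(self):
        return f"{self.name}-v{self.major}.{self.minor}"


def next_version(previous, features, rows):
    """Derive the next version record from the previous one."""
    features = tuple(features)
    digest = fingerprint(rows)
    if features != previous.features:
        return ModelVersion(previous.name, previous.major + 1, 0, features, digest)
    if digest != previous.data_digest:
        return ModelVersion(previous.name, previous.major, previous.minor + 1, features, digest)
    return previous  # nothing changed, so no new version is needed
```

Recording the data digest next to the version tag makes it possible to tell, months later, whether two models differ because of the data, the features, or both.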

Multiple Partitioned Models

Distinct from versioning, you should consider using multiple models that are each trained on a partition of the data. Each partition can use an identical model type and may also share an identical schema. The main benefit of using multiple partitioned models in Machine Learning is:

Economics

The economics of training models follow from the inherent economics of cloud infrastructure and the time needed to train even a shallow decision tree on petabytes of data. By splitting your data into partitions and training a separate model on each partition, regardless of which feature you chose to partition on, you can, for example, train a model on Auctions that ended in the past decade and deploy it separately from the model for the current decade, which is still being re-trained as new data arrives.

By separating models into partitions we can be confident in the static model, since the data behind it no longer changes. This confidence is what we commonly call a Bayesian or probabilistic model, because we can make a probabilistic inference.

At first glance you may think I am describing, in simplistic terms, cascaded classification or regression. These are distinct: partitioning your models is a technique, not an algorithm. Many times when I explain this technique, organizations I've worked with immediately jump to describing their multi-stage classification or regression models, because these are the algorithms they have memorized. Partitioned models do not belong to any specific category; you can partition your models whether they are for regression or not.
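As a rough illustration of the decade example, here is a minimal sketch using only the standard library. `MeanModel` is a deliberately trivial stand-in for whatever model you actually train, and every name in it (`PartitionedModels`, `decade_of`, `current_decade`) is hypothetical. The point is only the control flow: closed partitions are trained once and left alone, while the current partition is retrained.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class MeanModel:
    """Toy stand-in for a real model: predicts the mean of its targets."""
    prediction: float
    n_samples: int


def train(targets):
    return MeanModel(prediction=mean(targets), n_samples=len(targets))


def decade_of(year):
    return (year // 10) * 10


def partition_by_decade(rows):
    """Group (year, target) rows into lists of targets keyed by decade."""
    parts = defaultdict(list)
    for year, target in rows:
        parts[decade_of(year)].append(target)
    return dict(parts)


class PartitionedModels:
    def __init__(self, current_decade):
        self.current_decade = current_decade
        self.models = {}

    def fit(self, rows):
        for decade, targets in partition_by_decade(rows).items():
            if decade in self.models and decade != self.current_decade:
                continue  # closed partition: already trained, never retrained
            self.models[decade] = train(targets)

    def predict(self, year):
        # Route the query to the model that owns that decade.
        return self.models[decade_of(year)].prediction


rows = [(2012, 10.0), (2015, 20.0), (2021, 30.0)]
models = PartitionedModels(current_decade=2020)
models.fit(rows)                      # trains both partitions once
models.fit(rows + [(2023, 50.0)])     # only the 2020s model is retrained
```

In this sketch the cost of the second `fit` call depends only on the size of the current partition, which is where the economic benefit comes from.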

Applications for Partitioning

Information Security has many vendors that market features such as behavior analysis using machine learning. In many cases they will not discuss their models, what samples are used for training, how many, or which algorithms they use, because:

Proprietary!

On occasion I have been able to speak to vendors at a conference who were willing to discuss maybe one or two of these points with me. I like to ask:

How are threats evaluated over time and represented in the models?

What I learned was troubling to me. I commonly receive a response that is some mixture of cyber threat scoring and model retraining. If we have learned anything from GRC, it is that a threat score is not a risk assessment. More than that, in data science we know that a change of context can make any assessment made today either redundant or critical to the accuracy of a model.

Given that the model is designed to provide probabilistic inference based on samples over time, the confidence score of a model trained today will be far different than that of a model trained a decade ago on the data available at the time. Conversely, if the model is designed to infer point-in-time, you need many models that are trained, deployed, and never retrained, so that each retains its point-in-time accuracy when used as an input (or when its inference is used as an engineered value) to your regression model.

Forking a model

Another great real-world example is that threats are patched and attackers move on to new vulnerabilities. A critical, high-likelihood threat today may become moderate and unlikely in future as defense-in-depth is deployed and attackers stop using the patched vulnerability. This scenario requires retraining certain models to account for these changes over time. In this case a fork of a model may be required, so as to retain accuracy for both the point-in-time and the real-time inference requirements.
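A fork can be sketched as a deep copy: the original stays frozen for point-in-time inference and the copy keeps learning. The example below is a toy, assuming a hypothetical incremental model (`ExploitRateModel`) that simply tracks the fraction of observed events that were exploit attempts. A real model would need its own way to continue training from saved state.

```python
import copy


class ExploitRateModel:
    """Toy incremental model: share of observed events that were exploit attempts."""

    def __init__(self):
        self.exploits = 0
        self.events = 0

    def update(self, observations):
        # Each observation is True if the event was an exploit attempt.
        for was_exploit in observations:
            self.exploits += int(was_exploit)
            self.events += 1

    def likelihood(self):
        return self.exploits / self.events if self.events else 0.0


def fork(model):
    """Return an independent copy so the original can stay frozen."""
    return copy.deepcopy(model)


point_in_time = ExploitRateModel()
point_in_time.update([True, True, True, False])   # before the patch

real_time = fork(point_in_time)
real_time.update([False, False, False, False])    # after the patch is deployed
```

After the fork, `point_in_time` still answers "how likely was this threat back then?", while `real_time` reflects the lower likelihood once attackers have moved on.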

Conclusion

Machine Learning model training over time is not complex, but it is domain specific and should be driven by purpose rather than by opinionated or classical views taught in the past.

Try to look at the problem at hand and think about what you need to know now for your current point-in-time and real-time null hypothesis. Also try to imagine the future point-in-time and real-time needs, as these are never going to be identical to your current null hypothesis.

Then use not one, but all of these as variations of the original intent when designing a solution.
