CISSP Study Material

I am currently studying to sit the CISSP exam in 2018, and because I've taken over 25,000 words of notes so far, I thought I'd share what I have so that others might be able to study a bit more easily.

Relevant CISSP material is difficult to search for, mainly, I believe, because the exam changes often and because people who pass tend to hand their notes only to friends, family, or colleagues.

I've done my best to group relevant notes together in a coherent order for anyone just starting out. Enjoy this brain-numbing content, and if you found it useful, don't forget to share it so others can find it and benefit too.

Acronyms

GRC = Governance, Risk Management and Compliance

BIA = Business Impact Analysis

BCP = Business Continuity Plan

IDS = Intrusion detection system

IPS = Intrusion prevention system

SIEM = Security information and event management

DAC = Discretionary Access Control

DRP = Disaster Recovery Plan

RPO = Recovery Point Objective

RTO = Recovery Time Objective

MTD = Maximum Tolerable Downtime

MOE = Measures of Effectiveness

VIPS = Voice Intrusion Prevention System

Fundamental Principles of Security

- Availability - Reliable and timely access to data and resources is provided to authorized individuals

- Integrity - Accuracy and reliability of the information and systems are provided and any unauthorized modification is prevented

- Confidentiality - Necessary level of secrecy is enforced and unauthorized disclosure is prevented

Security Definitions

- Threat agent - Entity that can exploit a vulnerability, something (individual, machine, software, etc.) that can exploit vulnerabilities

- Threat - The danger of a threat agent exploiting a vulnerability

- Vulnerability - Weakness or a lack of countermeasure, something a threat agent can act upon, a hole that can be exploited

- Risk - The probability of a threat agent exploiting a vulnerability and the associated impact, threat plus the impact (asset - something important to business)

- Exposure - Presence of a vulnerability, which exposes the organization to a threat, possibility of happening

- Control - Safeguard that is put in place to reduce a risk, also called a countermeasure

Security Framework

- act as reference points

- provide common language for communications

- allow us to share information and create relevancy

- ITIL

- COBIT

Enterprise frameworks

- TOGAF

- DoDAF

- MODAF

- SABSA

- COSO

ISO Standards

- ISO27000 - built on BS7799

Risk

- we have assets that threat agents may want to take advantage of through vulnerabilities

- identify assets and their importance

- understand risk in the context of the business

Risk Management

- have a risk management policy

- have a risk management team

- start by doing risk assessment

Risk Assessment

- identifying vulnerabilities our assets face

- create risk profile

- identify as much as possible

- cannot find them all

- must continually perform assessment

- Four main goals

- identify assets and their value to the organization

- identify vulnerabilities and threats

- quantify or measure the business impact

- balance economically the application of a countermeasure against the cost of the countermeasure, develop cost analysis

- has to be supported by the upper management

- risk analysis team

- made up of specialists

- risk management specialists

- change management specialists

- IT knowledge specialists

NIST

FRAP

- Facilitated Risk Analysis Process

Octave

The Operationally Critical Threat, Asset, and Vulnerability Evaluation (OCTAVE) approach defines a risk-based strategic assessment and planning technique for security. OCTAVE is a self-directed approach, meaning that people from an organization assume responsibility for setting the organization's security strategy.

ISO27005

The ISO27k standards are deliberately risk-aligned, meaning that organizations are encouraged to assess the security risks to their information (called “information security risks” in the standards, but in reality they are simply information risks) as a prelude to treating them in various ways. Dealing with the highest risks first makes sense from the practical implementation and management perspectives.

Risk Analysis Approaches

- Quantitative risk analysis

- hard measures, numbers, dollar value, fact based

- Qualitative risk analysis

- soft measure, not easily defined, opinion based

Quantitative Risk Measurement (Number Based)

- SLE Single Loss Expectancy

- ARO Annualized Rate of Occurrence

- ALE Annualized Loss Expectancy (SLE x ARO)

For example, consider purchasing a firewall. What are its purpose and capabilities? Suppose that, on average, a breach occurs three times a year and data is compromised. If restoring the data and covering the liability costs $5,000 per incident, that is the single loss expectancy (SLE). With three occurrences a year (the ARO), the annualized loss expectancy is $5,000 * 3 = $15,000. The question is then whether the cost to mitigate is greater or less than the ALE.
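The firewall arithmetic above can be written out as a small sketch. The function names and the firewall's annual cost are assumptions made up for illustration; only the $5,000 SLE and the ARO of 3 come from the example.

```python
def annualized_loss_expectancy(sle: float, aro: float) -> float:
    """ALE = SLE x ARO."""
    return sle * aro


def mitigation_costs_less_than_ale(annual_mitigation_cost: float, ale: float) -> bool:
    """Compare the yearly cost of a countermeasure with the loss it addresses."""
    return annual_mitigation_cost < ale


sle = 5_000            # cost of a single incident (from the example)
aro = 3                # incidents per year (from the example)
ale = annualized_loss_expectancy(sle, aro)
print(ale)             # 15000

firewall_annual_cost = 8_000   # hypothetical figure, not from the notes
print(mitigation_costs_less_than_ale(firewall_annual_cost, ale))  # True
```

If the countermeasure costs more per year than the ALE, the spend is harder to justify on quantitative grounds alone.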

Quantitative Assessment

- systems

- training

- vulnerabilities

Qualitative Risk

- not so much hard numbers

- should be contextual to business policies and compliance requirements

Qualitative risk analysis is a project management technique concerned with discovering the probability of a risk event occurring and the impact the risk will have if it does occur. All risks have both probability and impact.

Very High

Requires the prompt attention of management. The stakeholder (executive or operational owner) must undertake detailed research, identify risk reduction options and prepare a detailed risk management plan. Reporting these exposures and mitigations through relevant forums is essential. Monitoring the risk mitigation strategies is essential.

High

High inherent risk requires the attention of the relevant manager so that appropriate controls can be set in place. The Risk & Reputation Committee monitor the implementation of key enterprise risk controls.

High residual risk is to be monitored to ensure the associated controls are working. Detailed research to identify additional risk reduction options and preparation of a detailed risk management plan is required.

Reporting these exposures and mitigations through relevant forums is required. Monitoring the risk mitigation strategies is required

Medium

This is the threshold that delineates the higher-level risks from those of less concern. After considering the nature of the likelihood and consequence values in relation to whether the risk could increase in value, consider additional cost-effective mitigations to reduce the risk.

Responsibility would fall on the relevant manager and specific monitoring of response procedures would occur.

Low

Look to accept, monitor and report on the risk or manage it through the implementation/enhancement of procedures

Very Low

These risks would be not considered any further unless they contribute to a scenario that could pose a serious event, or they escalate in either likelihood and/or consequence

Likelihood

Find relevant definitions for each category within your business. For example, "rare" could mean the risk may occur, but only in exceptional circumstances and decades apart, whereas "almost certain" risks are known to occur frequently (monthly or bi-quarterly, depending on business thresholds).

Impact Consequence

Find relevant definitions for each category within your business. For example, "insignificant" could mean an impact that can be absorbed within day-to-day business running costs, whereas "extreme" might mean contractual non-compliance or a breach of legislation with penalties or fines considerably higher than those for "major", possibly accompanied by extreme brand, operational, financial or reputational impacts.
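One common way to combine likelihood and consequence into the ratings listed earlier is a scoring matrix. The sketch below is only an illustration: the 1 to 5 scores, the intermediate category names ("unlikely", "possible", "likely", "minor", "moderate") and the thresholds are all assumptions, and each business would set its own.

```python
# Assumed 1-5 scales; only "rare", "almost certain", "insignificant",
# "major" and "extreme" appear in the notes, the rest are placeholders.
LIKELIHOOD = {"rare": 1, "unlikely": 2, "possible": 3, "likely": 4, "almost certain": 5}
IMPACT = {"insignificant": 1, "minor": 2, "moderate": 3, "major": 4, "extreme": 5}


def rate_risk(likelihood: str, impact: str) -> str:
    """Map a likelihood/impact pair to a rating using assumed thresholds."""
    score = LIKELIHOOD[likelihood] * IMPACT[impact]
    if score >= 20:
        return "Very High"
    if score >= 12:
        return "High"
    if score >= 6:
        return "Medium"
    if score >= 3:
        return "Low"
    return "Very Low"


print(rate_risk("almost certain", "extreme"))  # Very High
print(rate_risk("possible", "moderate"))       # Medium
print(rate_risk("rare", "insignificant"))      # Very Low
```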

Implementation

Policies

Overall general statement produced by senior management

- high level statements of intent

- what expectations of correct usage

Standards

Refer to mandatory activities or rules

- regulatory compliance

- mandated based on compliance regime

- in US, Sarbanes-Oxley, HIPAA

- Basel Accords

- Montreal Protocol

Baselines

Template for comparison, measure deviation from normal

- standardized solution

- allows us to find and measure deviations

Guidelines

Recommendations

- not mandatory, optional

- best practices

Procedures

Step by step activities that need to be performed in a certain order, detailed instructions.

Business Continuity and Disaster Recovery

The goal of disaster recovery is to minimize the effects of a disaster

The goal of business continuity is to resume normal business operations as quickly as possible with the least amount of resources necessary to do so

Disaster Recovery Plan (DRP)

Carried out while everything is still suffering from the effects of the disaster

Business Continuity Plan (BCP)

Used to return the business to normal operations

Business Continuity Management (BCM)

The process that is responsible for DRP and BCP.

Over-arching process

NIST SP800-34 Continuity Planning Guide for IT

- Develop the continuity planning policy statement

- Conduct the business impact analysis (BIA)

- Identify preventive controls

- Develop recovery strategies

- Develop the contingency plan

- Test the plan and conduct training and exercises

- Maintain the plan

BS25999 British Standard for Business Continuity Management

ISO 27031:2011 Business Continuity Planning

ISO 22301:2012 Business Continuity Management Systems

Department of Homeland Security BCP Kit

BCP Policy

Supplies the framework and governance for the BCP effort.

Contains:

- Scope

- Mission Statement

- Principles

- Guidelines

- Standards

Process to draft the policy

- Identify and document the components of the policy

- Identify and define existing policies that the BCP may affect

- Identify pertinent laws and standards

- Identify best practices

- Perform a GAP analysis

- Compose a draft of the new policy

- Internal review of the draft

- Incorporate feedback into draft

- Get approval of senior management

- Publish a final draft and distribute throughout organization

SWOT Analysis

- Strength

- Weakness

- Opportunity

- Threat

Business Continuity Planning Requirements

- Senior management support

- Management should be involved in setting the overall goals in continuity planning

Business Impact Analysis (BIA)

- Functional analysis

- Identifies which of the company's critical systems are needed for survival

- Estimates the down-time that can be tolerated by the system

- Maximum Tolerable Down-time (MTD)

- Maximum Period Time of Disruption (MPTD)

Steps:

- Select individuals to interview for data gathering

- Create data gathering tools

- Surveys

- Questionnaires

- Identify company's critical business functions

- Identify the resources these functions depend on

- Calculate how long these functions can survive without these resources

- Identify vulnerabilities and threats to these functions

- Calculate the risk for each different business function

- Document findings and report them to senior management

Goals:

- Determine criticality

- Estimate max downtime

- Evaluate internal and external resource requirements

Process

- Gather information

- Analyse information

- Perform threat analysis

- Document results and present recommendations

Disaster Recovery Planning

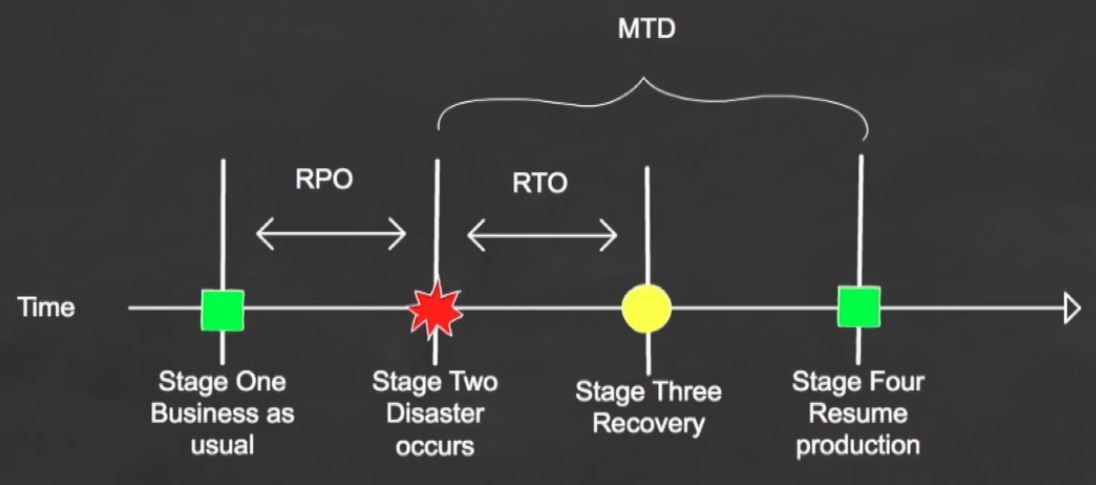

Stage 1 - Business as usual

Stage 2 - Disaster occurs

Stage 3 - Recovery

Stage 4 - Resume production

Recovery Time Objective (RTO)

- The earliest time period and a service level within which a business process must be restored after a disaster

- RTO value is smaller than the MTD because the MTD represents the time after which an inability to recover will mean severe damage to the business's reputation and/or the bottom line.

- The RTO assumes there is a period of acceptable down-time

Work Recovery Time (WRT)

- Remainder of the overall MTD value

Recovery Point Objective (RPO)

- Acceptable amount of data loss measured in time

MTD, RTO and RPO values are derived during the BIA
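Since the WRT is the remainder of the MTD once the RTO has been used, the relationship can be sketched as MTD = RTO + WRT. The function name and the 24-hour and 8-hour figures below are hypothetical values chosen for illustration.

```python
from datetime import timedelta


def work_recovery_time(mtd: timedelta, rto: timedelta) -> timedelta:
    """WRT is what remains of the MTD after the RTO: WRT = MTD - RTO."""
    if rto >= mtd:
        # The notes state that the RTO value is smaller than the MTD.
        raise ValueError("RTO must be smaller than MTD")
    return mtd - rto


mtd = timedelta(hours=24)   # hypothetical value derived during a BIA
rto = timedelta(hours=8)    # hypothetical value derived during a BIA
print(work_recovery_time(mtd, rto))  # 16:00:00
```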

Risk Assessment

Looks at the impact and likelihood of various threats to the business

Goals:

- Identify and document single points of failure

- Make a prioritized list of threats

- Gather information to develop risk control strategies

- Document acceptance of identified risks

- Document acknowledgment of risks that may not be addressed

Formula:

Risk = Threat * Impact * Probability

Main components:

- Review existing strategies for risk management

- Construct numerical scoring system for probability and impact

- Use numerical scoring to gauge effect of threats

- Estimate probability of threats

- Weigh each threat through the scoring system

- Calculate the risk by combining the scores of likelihood and impact of each threat

- Secure sponsor sign off on risk priorities

- Weigh appropriate measures

- Make sure planned measures do not heighten other risks

- Present assessment's findings to executive management
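The scoring steps above can be sketched with the formula Risk = Threat * Impact * Probability. The 1 to 5 scale, the class name and the sample threats are assumptions for illustration, not values from any standard.

```python
from dataclasses import dataclass


@dataclass
class ThreatScore:
    name: str
    threat: int       # assumed 1-5 score
    impact: int       # assumed 1-5 score
    probability: int  # assumed 1-5 score

    @property
    def risk(self) -> int:
        # Risk = Threat * Impact * Probability
        return self.threat * self.impact * self.probability


def prioritize(threats):
    """Return the threats ordered from highest to lowest risk score."""
    return sorted(threats, key=lambda t: t.risk, reverse=True)


# Hypothetical sample data
threats = [
    ThreatScore("flood", threat=2, impact=5, probability=1),
    ThreatScore("ransomware", threat=4, impact=5, probability=3),
    ThreatScore("power outage", threat=3, impact=3, probability=3),
]
for t in prioritize(threats):
    print(t.name, t.risk)
```

The sorted output is the prioritized list of threats that the sponsor would be asked to sign off on.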

Certification vs Accreditation

Certification is the comprehensive technical evaluation of the security components and their compliance for the purpose of accreditation.

The goal of a certification process is to ensure that a system, product, or network is right for the customer’s purposes.

Accreditation is the formal acceptance of the adequacy of a system’s overall security and functionality by management.

Access Control

Access Control Systems

- RADIUS (not vendor specific)

- AAA

- UDP

- Encrypts only the password from client to server

- works over PPP

- TACACS/xTACACS/TACACS+

- TCP

- Encrypts all traffic

- works over multiple protocols

- Diameter

- supports PAP, CHAP, EAP

- replay protection

- end to end protection

- enhanced accounting

Content dependent access control

- focused on content of data

- data may be labeled, like HR or Finances

- dependent on the context of the usage of the data

Y = Control, X = Control Category, Color key = Control types

Threats to systems:

Maintenance hooks are a type of back door used by developers to get "back into" their code if necessary.

Preventive measures against back doors:

- Use a host intrusion detection system to watch for any attackers using back doors into the system.

- Use file system encryption to protect sensitive information.

- Implement auditing to detect any type of back door use.

A time-of-check/time-of-use (TOC/TOU) attack deals with the sequence of steps a system uses to complete a task. This type of attack takes advantage of the dependency on the timing of events that take place in a multitasking operating system. A TOC/TOU attack is when an attacker jumps in between two tasks and modifies something to control the result.

To protect against TOC/TOU attacks, the operating system can apply software locks to the items it will use when it is carrying out its “checking” tasks.

A race condition occurs when two different processes need to carry out their tasks on one resource. In a race condition attack, an attacker makes the processes execute out of sequence to control the result.

To protect against race condition attacks, do not split up critical tasks that can have their sequence altered.
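A minimal sketch of both protections, assuming a hypothetical `Account` class: the "check" (is the balance sufficient?) and the "use" (subtract the amount) happen under one lock, so the critical task is not split and nothing can change the balance in between.

```python
import threading


class Account:
    """Hypothetical resource shared by several threads."""

    def __init__(self, balance: int):
        self.balance = balance
        self._lock = threading.Lock()

    def withdraw(self, amount: int) -> bool:
        # Check and use are kept together inside the same lock, so no
        # other thread can modify the balance between the two steps.
        with self._lock:
            if self.balance >= amount:   # time of check
                self.balance -= amount   # time of use
                return True
            return False


def run_concurrent_withdrawals(account: Account, amount: int, workers: int) -> int:
    """Start several threads that all try to withdraw; return the number that succeeded."""
    results = []

    def worker():
        results.append(account.withdraw(amount))

    threads = [threading.Thread(target=worker) for _ in range(workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return results.count(True)


account = Account(100)
print(run_concurrent_withdrawals(account, 30, workers=10))  # 3
print(account.balance)                                      # 10
```

Without the lock, two threads could both pass the check before either subtracts, which is the window a TOC/TOU attack relies on.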

TCP/IP OSI Layer

Threats to Access Control

Defeating Access Controls

- Credentials can be mirrored

- Passwords can be brute forced

- Locks can be picked

- You must assess the weaknesses of your solution

Keystroke monitoring

Object reuse (data remnants)

- object disposal

Tempest Shielding

- Faraday Cage

White Noise

- Voice assistants (Alexa, Siri, etc.) can be activated by white noise

Control Zone

- SCIF: A Sensitive Compartmented Information Facility which is a defense term for a secure room.

NIDS

Network Intrusion Detection Systems

- Real time traffic analysis

- Monitor through upload to logging server

- passive monitoring

- usually per subnet

HIDS/HIPS

Host-based Intrusion Detection/Prevention System

- Software based agent installed on machine

- not real time

- aggregated on schedule

How do they work

HIDS: Passive, do not stop attack, maybe send alert

HIPS: Active, can respond to and stop an attack as well as send an alert

- Signature Based

- Pattern Matching

- Must be kept up to date

- antivirus

- Stateful Matching

- Looks at sequences across traffic

- Building pattern based on traffic

- Must be kept up to date

- Anomaly Based

- looks at stream of traffic

- Look at expected behavior (rule based)

- Detects Abnormalities

- Could be statistical

- Look at raw traffic

- Could look at protocol Anomalies

- Heuristics

- Create virtual sandbox in memory

- Looks for abnormalities

Honeypot (honeynets)

- hacking magnet

- decoy

- honeynet.org

- enticement

Enticement

- legal

- let them make the decision to attack the system

Entrapment

- illegal

- trick them into attacking the system

Attacks against Honeypots

- Network Sniffer

- used to capture traffic

- Network monitor, etc.

- Dictionary attack

- Brute force attack

- Phishing

- Pharming

- Whaling

- Spear Phishing

- Vishing

Emanations Security

- Data radiating out via electrical/wireless signals

- TEMPEST shielding can help contain these signals

- Faraday cage

Intrusion Detection

IDS Types

- Signature Based

- Stateful

- Matches traffic patterns to activities

- Signature

- Matches individual packets to predefined definitions

- Regular updates required

- Cannot detect previously unknown attacks

- Anomaly Based

- Learns the normal traffic on the network and then spots exceptions

- Requires a "training" period to reduce false positives

- Also known as heuristic scanning

- Statistical detection

- Looks for traffic that is outside of the statistical norm

- Protocol detection

- Looks for protocols that are not typically in use on the network

- Traffic detection

- Looks for unusual activity inside of the traffic

- Rule Based

- Detects attack traffic through pre-defined rules

- Can be coupled with expert systems to be dynamic

- Cannot detect previously unknown attacks
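The difference between signature-based and statistical anomaly detection can be sketched in a few lines. The signature strings, the baseline numbers and the 3-standard-deviation threshold are all assumptions for illustration; real IDS products use far richer rules.

```python
from statistics import mean, stdev

# Hypothetical signature database: byte pattern -> description
SIGNATURES = {
    b"/etc/passwd": "path traversal attempt",
    b"' OR '1'='1": "SQL injection attempt",
}


def signature_match(payload: bytes) -> list:
    """Signature based: flag payloads containing a known pattern."""
    return [label for pattern, label in SIGNATURES.items() if pattern in payload]


def is_anomalous(baseline: list, observed: float, threshold: float = 3.0) -> bool:
    """Statistical anomaly: flag values far outside the learned norm."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        return observed != mu
    return abs(observed - mu) / sigma > threshold


# Known attack string: caught by the signature engine
print(signature_match(b"GET /../../etc/passwd HTTP/1.1"))

# Requests per minute learned during a "training" period (made-up numbers)
baseline = [100, 110, 90, 105, 95]
print(is_anomalous(baseline, 500))  # True
print(is_anomalous(baseline, 104))  # False
```

A payload with no known pattern passes the signature check untouched, which is why signature engines cannot detect previously unknown attacks, while the anomaly check depends entirely on how representative the baseline is.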

Software Development Security

A system development life cycle (SDLC) is made up of the following basic phases:

Initiation

- Need for a new system is defined; This phase addresses the questions, “What do we need and why do we need it?”

- A preliminary risk assessment should be carried out to develop an initial description of the confidentiality, integrity, and availability requirements of the system.

Acquisition/development

New system is either created or purchased

Activities during this phase will include:

- Requirements analysis

- In-depth study of what functions the company needs the desired system to carry out.

- Formal risk assessment

- Identifies vulnerabilities and threats in the proposed system and the potential risk levels as they pertain to confidentiality, integrity, and availability. This builds upon the initial risk assessment carried out in the previous phase. The results of this assessment help the team build the system’s security plan.

- Security functional requirements analysis

- Identifies the protection levels that must be provided by the system to meet all regulatory, legal, and policy compliance needs.

- Security assurance requirements analysis

- Identifies the assurance levels the system must provide. The activities that need to be carried out to ensure the desired level of confidence in the system are determined, which are usually specific types of tests and evaluations.

- Third-party evaluations

- Reviewing the level of service and quality a specific vendor will provide if the system is to be purchased.

- Security plan

- Documented security controls the system must contain to ensure compliance with the company’s security needs. This plan provides a complete description of the system and ties it to key company documents, such as configuration management, test and evaluation plans, system interconnection agreements, security accreditations, etc.

- Security test and evaluation plan

- Outlines how security controls should be evaluated before the system is approved and deployed.

Implementation

- New system is installed into production environment.

- A system should have baselines set pertaining to the system’s hardware, software, and firmware configuration during the implementation phase.

Operation/maintenance

- System is used and cared for.

- Continuous monitoring needs to take place to ensure that baselines are always met.

- Defined configuration management and change control procedures help to ensure that a system’s baseline is always met.

- Vulnerability assessments and penetration testing should also take place in this phase.

Disposal

- System is removed from production environment.

- Disposal activities need to ensure that an orderly termination of the system takes place and that all necessary data are preserved.

Software Development Life Cycle (SDLC)

The life cycle of software development deals with putting repeatable and predictable processes in place that help ensure functionality, cost, quality, and delivery schedule requirements are met.

Phases:

Requirements gathering

- Determine why the software should be created, what the software will do, and for whom the software will be created.

- This is the phase when everyone involved attempts to understand why the project is needed and what the scope of the project entails.

- Security requirements

- Security risk assessment

- Privacy risk assessment

- Risk-level acceptance

Design

- Deals with how the software will accomplish the goals identified, which are encapsulated into a functional design.

- Attack surface analysis - identify and reduce the amount of code and functionality accessible to untrusted users.

- Threat modeling - systematic approach used to understand how different threats could be realized and how a successful compromise could take place.

Development

- Programming software code to meet specifications laid out in the design phase.

- The software design that was created in the previous phase is broken down into defined deliverables, and programmers develop code to meet the deliverable requirements.

Testing/Validation

- Validating software to ensure that goals are met and the software works as planned.

- It is important to map security risks to test cases and code.

- Tests are conducted in an environment that should mirror the production environment.

- Security attacks and penetration tests usually take place during this phase to identify any missed vulnerabilities.

- The most common testing approaches:

- Unit testing - Individual component is in a controlled environment where programmers validate data structure, logic, and boundary conditions.

- Integration testing - Verifying that components work together as outlined in design specifications.

- Acceptance testing - Ensuring that the code meets customer requirements.

- Regression testing - After a change to a system takes place, retesting to ensure functionality, performance, and protection.

- Fuzzing is a technique used to discover flaws and vulnerabilities in software. Fuzzing is the act of sending random data to the target program in order to trigger failures.

- Dynamic analysis refers to the evaluation of a program in real time, i.e., when it is running. Dynamic analysis is carried out once a program has cleared the static analysis stage and basic programming flaws have been rectified offline.

- Static analysis is a debugging technique that is carried out by examining the code without executing the program, and therefore is carried out before the program is compiled.
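As a rough illustration of fuzzing, the sketch below sends random byte strings to a deliberately buggy, hypothetical parser and records every input that triggers an unexpected failure. The target function, the iteration count and the seed are assumptions; real fuzzers are considerably smarter about input generation.

```python
import random


def parse_record(data: bytes) -> bytes:
    """Hypothetical target: first byte is a length, the rest is the payload."""
    if not data:
        raise ValueError("empty record")   # expected, handled rejection
    declared_length = data[0]
    payload = data[1:]
    out = bytearray()
    for i in range(declared_length):
        # Deliberate bug: the declared length is never validated, so this
        # raises IndexError when it exceeds the actual payload size.
        out.append(payload[i])
    return bytes(out)


def fuzz(target, iterations: int = 200, max_len: int = 8, seed: int = 0):
    """Feed random inputs to target and collect the ones that crash it."""
    rng = random.Random(seed)
    crashes = []
    for _ in range(iterations):
        data = bytes(rng.randrange(256) for _ in range(rng.randint(0, max_len)))
        try:
            target(data)
        except ValueError:
            continue                       # documented rejection, not a flaw
        except Exception as exc:           # anything else is a finding
            crashes.append((data, type(exc).__name__))
    return crashes


crashes = fuzz(parse_record)
print(len(crashes), "crashing inputs found")
```

Each recorded input can then be replayed against the parser to reproduce and fix the flaw.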

Release/Maintenance

- Deploying the software and then ensuring that it is properly configured, patched, and monitored.

Statement of Work (SOW)

Describes the product and customer requirements. A detail-oriented SOW will help ensure that these requirements are properly understood and assumptions are not made.

Work breakdown structure (WBS)

A project management tool used to define and group a project’s individual work elements in an organized manner.

Privacy Impact Rating

Indicates the sensitivity level of the data that will be processed or made accessible.

Computer-aided software engineering (CASE)

Refers to software that allows for the automated development of software, which can come in the form of program editors, debuggers, code analyzers, version-control mechanisms, and more.

Verification

Determines if the product accurately represents and meets the specifications.

Validation

Determines if the product provides the necessary solution for the intended real-world problem.

Software Development Models

Build and Fix Model

Development takes place immediately with little or no planning involved. Problems are dealt with as they occur, which is usually after the software product is released to the customer.

Waterfall Model

A linear-sequential life-cycle approach. Each phase must be completed in its entirety before the next phase can begin. At the end of each phase, a review takes place to confirm that the project is on the correct path and to decide whether it should continue.

In this model all requirements are gathered in the initial phase and there is no formal way to integrate changes as more information becomes available or requirements change.

V-Shaped Model (V-Model)

Follows steps that are laid out in a V format.

This model emphasizes the verification and validation of the product at each phase and provides a formal method of developing testing plans as each coding phase is executed.

Each phase must be completed before the next phase begins.

Prototyping

A sample of software code or a model (prototype) can be developed to explore a specific approach to a problem before investing expensive time and resources.

Rapid prototyping is an approach that allows the development team to quickly create a prototype (sample) to test the validity of the current understanding of the project requirements.

Evolutionary prototypes are developed, with the goal of incremental improvement.

Incremental Model

Each incremental phase results in a deliverable that is an operational product. This means that a working version of the software is produced after the first iteration and that version is improved upon in each of the subsequent iterations.

Spiral Model

Uses an iterative approach to software development and places emphasis on risk analysis. The model is made up of four main phases: planning, risk analysis, development and test, and evaluation.

Rapid Application Development

Relies more on the use of rapid prototyping instead of extensive upfront planning.

Combines the use of prototyping and iterative development procedures with the goal of accelerating the software development process.

Agile Model

Focuses on incremental and iterative development methods that promote cross-functional teamwork and continuous feedback mechanisms.

The Agile model does not use prototypes to represent the full product, but breaks the product down into individual features.

Capability Maturity Model Integration (CMMI)

- Initial - Development process is ad hoc or even chaotic. The company does not use effective management procedures and plans. There is no assurance of consistency, and quality is unpredictable.

- Repeatable - A formal management structure, change control, and quality assurance are in place. The company can properly repeat processes throughout each project. The company does not have formal process models defined.

- Defined - Formal procedures are in place that outline and define processes carried out in each project. The organization has a way to allow for quantitative process improvement.

- Managed - The company has formal processes in place to collect and analyze quantitative data, and metrics are defined and fed into the process improvement program.

- Optimizing - The company has budgeted and integrated plans for continuous process improvement.

Software escrow

Storing of the source code of software with a third-party escrow agent. The software source code is released to the licensee if the licensor (software vendor) files for bankruptcy or fails to maintain and update the software product as promised in the software license agreement.

Programming Languages and Concepts:

- (1st generation programming language) - Machine language is in a format that the computer’s processor can understand and work with directly.

- (2nd generation programming language) - Assembly language is considered a low-level programming language and is the symbolic representation of machine-level instructions. It is “one step above” machine language. It uses symbols (called mnemonics) to represent complicated binary codes. Programs written in assembly language are also hardware specific.

- (3rd generation programming language) - High-level languages use abstract statements. Abstraction condenses multiple assembly language instructions into a single high-level statement, e.g., IF – THEN – ELSE. High-level languages are processor independent. Code written in a high-level language can be converted to machine language for different processor architectures using compilers and interpreters.

- (4th generation programming language) - Very high-level languages focus on highly abstract algorithms that allow straightforward programming implementation in specific environments.

- (5th generation programming language) - Natural languages have the ultimate target of eliminating the need for programming expertise and instead use advanced knowledge-based processing and artificial intelligence.

Assemblers

Tools that convert assembly language source code into machine code.

Compilers

Tools that convert high-level language statements into the necessary machine-level format (.exe, .dll, etc.) for specific processors to understand.

Interpreters

Tools that convert code written in interpreted languages to the machine-level format for processing.

Garbage collector

Identifies blocks of memory that were once allocated but are no longer in use and deallocates the blocks and marks them as free.

Object Oriented Programming (OOP)

Works with classes and objects.

A method is the functionality or procedure an object can carry out.

Data hiding is provided by encapsulation, which protects an object’s private data from outside access. No object should be allowed to, or have the need to, access another object’s internal data or processes.

Abstraction

The capability to suppress unnecessary details so the important, inherent properties can be examined and reviewed.

Polymorphism

Two objects can receive the same input and have different outputs.
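A short sketch of encapsulation and polymorphism, using hypothetical `Shape` classes: the size is kept as internal data and reached only through methods, and two objects receive the same `area()` call yet produce different outputs.

```python
import math


class Shape:
    def __init__(self, size: float):
        # Leading underscore: internal data by convention, not meant to be
        # accessed from outside the object (encapsulation / data hiding).
        self._size = size

    def area(self) -> float:
        raise NotImplementedError


class Square(Shape):
    def area(self) -> float:
        return self._size ** 2


class Circle(Shape):
    def area(self) -> float:
        return math.pi * self._size ** 2


# Same message, same input size, different behavior (polymorphism)
for shape in (Square(2), Circle(2)):
    print(type(shape).__name__, round(shape.area(), 2))
```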

Data modeling

Considers data independently of the way the data are processed and of the components that process the data. A process used to define and analyze data requirements needed to support the business processes.

Cohesion

A measurement that indicates how many different types of tasks a module needs to carry out.

Coupling

A measurement that indicates how much interaction one module requires for carrying out its tasks.

Data structure

A representation of the logical relationship between elements of data.

Distributed Computing Environment (DCE)

The first framework and development toolkit for developing client/server applications to allow for distributed computing.

Common Object Request Broker Architecture (CORBA)

Open object-oriented standard architecture developed by the Object Management Group (OMG). The standards enable software components written in different computer languages and running on different systems to communicate.

Object request broker (ORB)

Manages all communications between components and enables them to interact in a heterogeneous and distributed environment. The ORB acts as a “broker” between a client request for a service from a distributed object and the completion of that request.

Component Object Model (COM)

A model developed by Microsoft that allows for interprocess communication between applications potentially written in different programming languages on the same computer system.

Object linking and embedding (OLE)

Provides a way for objects to be shared on a local computer and to use COM as their foundation. It is a technology developed by Microsoft that allows embedding and linking to documents and other objects.

Java Platform, Enterprise Edition (J2EE)

Is based upon the Java programming language, which allows a modular approach to programming code with the goal of interoperability. J2EE defines a client/server model that is object oriented and platform independent.

Service-oriented architecture (SOA)

Provides standardized access to the most needed services to many different applications at one time. Service interactions are self-contained and loosely coupled, so that each interaction is independent of any other interaction.

Simple Object Access Protocol (SOAP)

An XML-based protocol that encodes messages in a web service environment.
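To make the XML encoding concrete, here is a small sketch that builds a SOAP 1.1 style envelope with Python's standard library. The operation name `GetQuote` and its parameter are made up for illustration; a real service defines its own operations in its WSDL.

```python
import xml.etree.ElementTree as ET

# Namespace of the SOAP 1.1 envelope.
SOAP_NS = "http://schemas.xmlsoap.org/soap/envelope/"


def build_envelope(operation: str, params: dict) -> str:
    """Wrap a hypothetical operation call in an Envelope/Body structure."""
    ET.register_namespace("soap", SOAP_NS)
    envelope = ET.Element(f"{{{SOAP_NS}}}Envelope")
    body = ET.SubElement(envelope, f"{{{SOAP_NS}}}Body")
    call = ET.SubElement(body, operation)
    for name, value in params.items():
        ET.SubElement(call, name).text = str(value)
    return ET.tostring(envelope, encoding="unicode")
```

For example, `build_envelope("GetQuote", {"symbol": "ACME"})` returns an XML string whose `Body` element carries the request.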

Mashup

The combination of functionality, data, and presentation capabilities of two or more sources to provide some type of new service or functionality.

Software as a Service (SaaS)

A software delivery model that allows applications and data to be centrally hosted and accessed by thin clients, commonly web browsers. A common delivery method of cloud computing.

Cloud computing

A method of providing computing as a service rather than as a physical product. It is Internet-based computing, whereby shared resources and software are provided to computers and other devices on demand.

Mobile code

Code that can be transmitted across a network, to be executed by a system or device on the other end.

Java applets

Small components (applets) that provide various functionalities and are delivered to users in the form of Java bytecode. Java applets can run in a web browser using a Java Virtual Machine (JVM). Java is platform independent; thus, Java applets can be executed by browsers for many platforms.

Sandbox

A virtual environment that allows for very fine-grained control over the actions that code within the machine is permitted to take. This is designed to allow safe execution of untrusted code from remote sources.

ActiveX

A Microsoft technology composed of a set of OOP technologies and tools based on COM and DCOM. It is a framework for defining reusable software components in a programming language–independent manner.

Authenticode

A type of code signing, which is the process of digitally signing software components and scripts to confirm the software author and guarantee that the code has not been altered or corrupted since it was digitally signed. Authenticode is Microsoft’s implementation of code signing.

Threats for Web Environments

Information gathering

Usually the first step in an attacker’s methodology, in which the information gathered may allow an attacker to infer additional information that can be used to compromise systems.

Server side includes (SSI)

An interpreted server-side scripting language used almost exclusively for web-based communication. It is commonly used to include the contents of one or more files into a web page on a web server. Allows web developers to reuse content by inserting the same content into multiple web documents.

Client-side validation

- Input validation is done at the client before it is even sent back to the server to process.

- Path or directory traversal - Attack is also known as the “dot dot slash” because it is perpetrated by inserting the characters “../” several times into a URL to back up or traverse into directories that were not supposed to be accessible from the Web.

- Unicode encoding - An attacker using Unicode could effectively make the same directory traversal request without using “/” but with any of the Unicode representations of that character (three exist: %c1%1c, %c0%9v, and %c0%af)

- URL Encoding - %20 = a space
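Because client-side checks can be bypassed, the server has to decode and verify the requested path itself. The sketch below is one possible check, assuming a hypothetical web root of `/var/www/html`. It decodes the URL only once and does not cover every encoding trick (double encoding, for instance).

```python
import posixpath
from urllib.parse import unquote

WEB_ROOT = "/var/www/html"  # hypothetical document root


def resolve_request_path(url_path: str) -> str:
    """Return the normalized file path, or raise ValueError on traversal."""
    # Percent-decoding first: "%2e%2e%2f" becomes "../" and "%20" a space.
    decoded = unquote(url_path)
    candidate = posixpath.normpath(
        posixpath.join(WEB_ROOT, decoded.lstrip("/"))
    )
    # After the "../" segments are collapsed, the result must still sit
    # inside the web root.
    if candidate != WEB_ROOT and not candidate.startswith(WEB_ROOT + "/"):
        raise ValueError(f"directory traversal attempt: {url_path!r}")
    return candidate
```

A request for `/docs/my%20file.txt` resolves normally, while `/%2e%2e/%2e%2e/etc/passwd` is rejected.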

Cross-site scripting (XSS)

- An attack where a vulnerability is found on a web site that allows an attacker to inject malicious code into a web application.

- There are three different XSS vulnerabilities:

- Nonpersistent XSS vulnerabilities, or reflected vulnerabilities, occur when an attacker tricks the victim into processing a URL programmed with a rogue script to steal the victim’s sensitive information (cookie, session ID, etc.). The principle behind this attack lies in exploiting the lack of proper input or output validation on dynamic web sites.

- Persistent XSS vulnerabilities, also known as stored or second order vulnerabilities, are generally targeted at web sites that allow users to input data which are stored in a database or any other such location, e.g., forums, message boards, guest books, etc. The attacker posts some text that contains some malicious JavaScript, and when other users later view the posts, their browsers render the page and execute the attacker’s JavaScript.

- DOM (Document Object Model)–based XSS vulnerabilities are also referred to as local cross-site scripting. DOM is the standard structure layout to represent HTML and XML documents in the browser. In such attacks the document components such as form fields and cookies can be referenced through JavaScript. The attacker uses the DOM environment to modify the original client-side JavaScript. This causes the victim’s browser to execute the resulting abusive JavaScript code.
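Output encoding is a common defense against reflected and stored XSS. As a minimal sketch (the `render_comment` function is hypothetical), user-supplied values are escaped before being placed into HTML:

```python
import html


def render_comment(author: str, text: str) -> str:
    """Build an HTML fragment, escaping user-supplied values on output."""
    # html.escape turns <, >, & and quotes into entities, so an injected
    # <script> tag is shown as text instead of being executed.
    return f"<p><b>{html.escape(author)}</b>: {html.escape(text)}</p>"
```

This does not address DOM-based XSS, which has to be handled in the client-side JavaScript itself.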

Parameter validation

The values that are being received by the application are validated to be within defined limits before the server application processes them within the system.
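A short sketch of server-side parameter validation, assuming a hypothetical order form with a `quantity` field limited to 1 through 100:

```python
def validate_quantity(raw: str, minimum: int = 1, maximum: int = 100) -> int:
    """Accept the submitted value only if it is a whole number in range."""
    # Rejects signs, decimals, letters, and empty strings.
    if not (raw.isascii() and raw.isdigit()):
        raise ValueError("quantity must be a whole number")
    value = int(raw)
    if not minimum <= value <= maximum:
        raise ValueError(f"quantity must be between {minimum} and {maximum}")
    return value
```

The limits here are placeholders; the point is that the check runs on the server before the value is used.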

Web proxy

A piece of software installed on a system that is designed to intercept all traffic between the local web browser and the web server.

Replay attack

An attacker captures the traffic from a legitimate session and replays it with the goal of masquerading as an authenticated user.
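One common way to detect replayed messages is to bind each message to a one-time nonce and a timestamp. The sketch below is illustrative only; the shared key, the 30-second window, and the function names are assumptions, not something prescribed by the exam material.

```python
import hashlib
import hmac
import time

SECRET = b"demo-shared-secret"  # hypothetical key shared by both parties
MAX_AGE_SECONDS = 30            # assumed freshness window
_seen_nonces = set()


def sign(message: bytes, nonce: str, timestamp: int) -> str:
    """Compute an HMAC over the message, nonce, and timestamp."""
    payload = b"|".join([message, nonce.encode(), str(timestamp).encode()])
    return hmac.new(SECRET, payload, hashlib.sha256).hexdigest()


def accept(message: bytes, nonce: str, timestamp: int, tag: str, now=None) -> bool:
    """Reject forged, stale, or previously seen messages."""
    now = time.time() if now is None else now
    if not hmac.compare_digest(tag, sign(message, nonce, timestamp)):
        return False  # altered or forged
    if abs(now - timestamp) > MAX_AGE_SECONDS:
        return False  # too old to be a live request
    if nonce in _seen_nonces:
        return False  # same nonce seen before: a replay
    _seen_nonces.add(nonce)
    return True
```

A captured message passes the HMAC check but fails the nonce check when it is sent a second time.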

Database Management Software

A database is a collection of data stored in a meaningful way that enables multiple users and applications to access, view, and modify data as needed.

Any type of database should have the following characteristics:

- It centralizes data rather than holding it on several different servers throughout the network.

- It allows for easier backup procedures.

- It provides transaction persistence.

- It allows for more consistency since all the data are held and maintained in one central location.

- It provides recovery and fault tolerance.

- It allows the sharing of data with multiple users.

- It provides security controls that implement integrity checking, access control, and the necessary level of confidentiality.

Transaction persistence means the database procedures carrying out transactions are durable and reliable. The state of the database’s security should be the same after a transaction has occurred, and the integrity of the transaction needs to be ensured.

Database Models

- Relational: uses attributes (columns) and tuples (rows) to contain and organize information. It presents information in the form of tables.

- Hierarchical: combines records and fields that are related in a logical tree structure. The structure and relationship between the data elements are different from those in a relational database. In the hierarchical database the parents can have one child, many children, or no children. The most commonly used implementation of the hierarchical model is in the Lightweight Directory Access Protocol (LDAP) model.

- Network: allows each data element to have multiple parent and child records. This forms a redundant network-like structure instead of a strict tree structure.

- Object-oriented: is designed to handle a variety of data types (images, audio, documents, video).

- Object-relational: a relational database with a software front end that is written in an object-oriented programming language.

- Record: A collection of related data items.

- File: A collection of records of the same type.

- Database: A cross-referenced collection of data.

- DBMS: Manages and controls the database.

- Tuple: A row in a two-dimensional database.

- Attribute: A column in a two-dimensional database.

- Primary key: Columns that make each row unique. (Every row of a table must include a primary key.)

- View: A virtual relation defined by the database administrator in order to keep subjects from viewing certain data.

- Foreign key: An attribute of one table that is related to the primary key of another table.

- Cell: An intersection of a row and a column.

- Schema: Defines the structure of the database.

- Data dictionary: Central repository of data elements and their relationships.

- Rollback: An operation that ends a current transaction and cancels all the recent changes to the database until the previous checkpoint/commit point.

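- Two-phase commit: A control that ensures the integrity of data held across multiple databases. Each database first confirms it is ready to commit, and the change is committed only if all of them agree; otherwise it is rolled back everywhere.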

- Cell suppression: A technique used to hide specific cells that contain sensitive information.

- Noise and perturbation: A technique of inserting bogus information in the hopes of misdirecting an attacker or confusing the matter enough that the actual attack will not be fruitful.

- Data warehousing: Combines data from multiple databases or data sources into a large database for the purpose of providing more extensive information retrieval and data analysis.

- Data mining: The process of massaging the data held in the data warehouse into more useful information.
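Several of these terms can be seen together in a small example. The sketch below uses SQLite through Python's standard library; the table and column names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE department (
        dept_id INTEGER PRIMARY KEY,   -- primary key
        name    TEXT NOT NULL
    );
    CREATE TABLE employee (
        emp_id  INTEGER PRIMARY KEY,
        name    TEXT NOT NULL,
        salary  INTEGER NOT NULL,      -- sensitive attribute
        dept_id INTEGER REFERENCES department(dept_id)  -- foreign key
    );
    -- View: a virtual relation that leaves out the sensitive column.
    CREATE VIEW employee_public AS
        SELECT emp_id, name, dept_id FROM employee;
    INSERT INTO department VALUES (1, 'Security');
    INSERT INTO employee VALUES (10, 'Alice', 90000, 1);
""")
conn.commit()

# Columns a subject sees when restricted to the view.
cursor = conn.execute("SELECT * FROM employee_public")
public_columns = [column[0] for column in cursor.description]

# Rollback: cancel uncommitted changes back to the last commit point.
conn.execute("UPDATE employee SET salary = 0 WHERE emp_id = 10")
conn.rollback()
salary_after_rollback = conn.execute(
    "SELECT salary FROM employee WHERE emp_id = 10"
).fetchone()[0]
```

The view exposes only `emp_id`, `name`, and `dept_id`, and the rolled-back update leaves the salary unchanged.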

Database Programming Interfaces

- Open Database Connectivity (ODBC): An API that allows an application to communicate with a database, either locally or remotely.

- Object Linking and Embedding Database (OLE DB): Separates data into components that run as middleware on a client or server. It provides a low-level interface to link information across different databases, and provides access to data no matter where they are located or how they are formatted.

- ActiveX Data Objects (ADO): An API that allows applications to access back-end database systems. It is a set of COM-based interfaces that exposes the functionality of data sources through accessible objects. ADO uses the OLE DB interface to connect with the database, and can be developed with many different scripting languages.

- Java Database Connectivity (JDBC): An API that allows a Java application to communicate with a database. The application can bridge through ODBC or directly to the database.

Data definition language (DDL)

Defines the structure and schema of the database.

Data manipulation language (DML)

Contains all the commands that enable a user to view, manipulate, and use the database (view, add, modify, sort, and delete commands).

Query language (QL)

Enables users to make requests of the database.

Integrity:

Database software performs three main types of integrity services: semantic, referential, and entity.

- A semantic integrity mechanism makes sure structural and semantic rules are enforced. These rules pertain to data types, logical values, uniqueness constraints, and operations that could adversely affect the structure of the database.

- A database has referential integrity if all foreign keys reference existing primary keys. There should be a mechanism in place that ensures no foreign key contains a reference to a primary key of a nonexisting record, or a null value.

- Entity integrity guarantees that the tuples are uniquely identified by primary key values.
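Referential and entity integrity can be observed directly in SQLite. This is a sketch with hypothetical tables; note that SQLite enforces foreign keys only after `PRAGMA foreign_keys = ON`.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # off by default in SQLite
conn.execute("CREATE TABLE customer (cust_id INTEGER PRIMARY KEY, name TEXT)")
conn.execute(
    "CREATE TABLE orders ("
    " order_id INTEGER PRIMARY KEY,"
    " cust_id INTEGER NOT NULL REFERENCES customer(cust_id))"
)
conn.execute("INSERT INTO customer VALUES (1, 'Alice')")


def try_insert(sql: str) -> str:
    """Run one INSERT and report whether the database accepted it."""
    try:
        conn.execute(sql)
        return "accepted"
    except sqlite3.IntegrityError as exc:
        return f"rejected: {exc}"


ok = try_insert("INSERT INTO orders VALUES (100, 1)")
# Referential integrity: customer 999 does not exist.
dangling = try_insert("INSERT INTO orders VALUES (101, 999)")
# Entity integrity: primary key 1 is already taken.
duplicate = try_insert("INSERT INTO customer VALUES (1, 'Bob')")
```

The first insert is accepted; the other two are rejected by the database itself rather than by application code.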

Polyinstantiation

This enables a table to contain multiple tuples with the same primary key, with each instance distinguished by a security level.
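A toy model of polyinstantiation, assuming a hypothetical cargo table: the same primary key appears at two classification levels, and a subject sees the highest instance their clearance allows.

```python
LEVELS = {"unclassified": 0, "secret": 1, "top secret": 2}

# Rows are keyed by (primary key, classification level).
cargo = {
    ("ship-42", "unclassified"): "food supplies",  # cover story
    ("ship-42", "top secret"): "weapons",          # real data
}


def read_cargo(ship_id: str, clearance: str):
    """Return the highest-classified instance visible at this clearance."""
    visible = [
        (LEVELS[level], value)
        for (key, level), value in cargo.items()
        if key == ship_id and LEVELS[level] <= LEVELS[clearance]
    ]
    return max(visible)[1] if visible else None
```

A user with secret clearance sees "food supplies" and has no indication that a higher-classified row exists.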

Online transaction processing (OLTP)

Used when databases are clustered to provide fault tolerance and higher performance. The main goal of OLTP is to ensure that transactions happen properly or they don’t happen at all.

The ACID test:

- Atomicity: Divides transactions into units of work and ensures that all modifications take effect or none takes effect. Either the changes are committed or the database is rolled back.

- Consistency: A transaction must follow the integrity policy developed for that particular database and ensure all data are consistent in the different databases.

- Isolation: Transactions execute in isolation until completed, without interacting with other transactions. The results of the modification are not available until the transaction is completed.

- Durability: Once the transaction is verified as accurate on all systems, it is committed and the databases cannot be rolled back.
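Atomicity is the easiest of the four properties to demonstrate. The sketch below uses a hypothetical `account` table in SQLite: a transfer either applies both updates or neither.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE account ("
    " name TEXT PRIMARY KEY,"
    " balance INTEGER NOT NULL CHECK (balance >= 0))"
)
conn.executemany(
    "INSERT INTO account VALUES (?, ?)", [("alice", 100), ("bob", 50)]
)
conn.commit()


def transfer(src: str, dst: str, amount: int) -> bool:
    """Move funds as one unit of work: both updates commit or neither does."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes
            conn.execute(
                "UPDATE account SET balance = balance + ? WHERE name = ?",
                (amount, dst),
            )
            conn.execute(
                "UPDATE account SET balance = balance - ? WHERE name = ?",
                (amount, src),
            )
        return True
    except sqlite3.IntegrityError:
        # The debit violated the CHECK constraint, so the credit made
        # just before it was rolled back as well.
        return False


def balances() -> dict:
    return dict(conn.execute("SELECT name, balance FROM account"))
```

An overdraft attempt leaves both balances exactly as they were, even though the credit statement ran first.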

Expert systems

Use artificial intelligence (AI) to solve complex problems. They are systems that emulate the decision-making ability of a human expert.

Inference engine

A computer program that tries to derive answers from a knowledge base. It is the “brain” that expert systems use to reason about the data in the knowledge base for the ultimate purpose of formulating new conclusions.

Rule-based programming

A common way of developing expert systems, with rules based on if-then logic units, and specifying a set of actions to be performed for a given situation.
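A tiny forward-chaining sketch shows how if-then rules and an inference engine fit together. The rules and fact names are invented for illustration.

```python
# Each rule: (facts that must all be known, fact to conclude).
RULES = [
    ({"failed_logins_high", "off_hours"}, "suspicious_login"),
    ({"suspicious_login", "admin_account"}, "raise_alert"),
]


def infer(facts: set) -> set:
    """Apply the if-then rules repeatedly until nothing new is derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in RULES:
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known
```

Here the rule list plays the role of the knowledge base and `infer` plays the role of the inference engine, chaining the first conclusion into the second rule.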

Artificial neural network (ANN)

A mathematical or computational model based on the neural structure of the brain.

Malware Types:

Virus

A small application, or string of code, that infects host applications. It is a programming code that can replicate itself and spread from one system to another.

Macro virus

A virus written in a macro language and that is platform independent. Since many applications allow macro programs to be embedded in documents, the programs may be run automatically when the document is opened. This provides a distinct mechanism by which viruses can be spread.

Compression viruses

Another type of virus that appends itself to executables on the system and compresses them by using the user’s permissions.

Stealth virus

A virus that hides the modifications it has made. The virus tries to trick antivirus software by intercepting its requests to the operating system and providing false and bogus information.

Polymorphic virus

Produces varied but operational copies of itself. A polymorphic virus may have no parts that remain identical between infections, making it very difficult to detect directly using signatures.

Multipart virus

Also called a multipartite virus, this has several components to it and can be distributed to different parts of the system. It infects and spreads in multiple ways, which makes it harder to eradicate when identified.

Self-garbling virus

Attempts to hide from antivirus software by modifying its own code so that it does not match predefined signatures.

Meme viruses

These are not actual computer viruses, but types of e-mail messages that are continually forwarded around the Internet.

Bots

Software applications that run automated tasks over the Internet, which perform tasks that are both simple and structurally repetitive. Malicious use of bots is the coordination and operation of an automated attack by a botnet (centrally controlled collection of bots).

Worms

These are different from viruses in that they can reproduce on their own without a host application and are self-contained programs.

Logic bomb

Executes a program, or string of code, when a certain event happens or a date and time arrives.

Rootkit

Set of malicious tools that are loaded on a compromised system through stealthy techniques. The tools are used to carry out more attacks either on the infected systems or surrounding systems.

Trojan horse

A program that is disguised as another program with the goal of carrying out malicious activities in the background without the user knowing.

Remote access Trojans (RATs)

Malicious programs that run on systems and allow intruders to access and use a system remotely.

Immunizer

Attaches code to the file or application, which would fool a virus into “thinking” it was already infected.

Behavior blocking

Allows suspicious code to execute within the operating system and watches its interactions with the operating system, looking for suspicious activities.

Security Architecture and Design

Stakeholders for a system are the users, operators, maintainers, developers, and suppliers.

Computer architecture encompasses all of the parts of a computer system that are necessary for it to function, including the operating system, memory chips, logic circuits, storage devices, input and output devices, security components, buses, and networking interfaces.

ISO/IEC 42010:2007

International standard that provides guidelines on how to create and maintain system architectures.

Central processing unit (CPU)

Carries out the execution of instructions within a computer.

Arithmetic logic unit (ALU)

Component of the CPU that carries out logic and mathematical functions as they are laid out in the programming code being processed by the CPU.

Register

Small, temporary memory storage units integrated and used by the CPU during its processing functions.

Control unit

Part of the CPU that oversees the collection of instructions and data from memory and how they are passed to the processing components of the CPU.

General registers

Temporary memory location the CPU uses during its processes of executing instructions. The ALU’s “scratch pad” it uses while carrying out logic and math functions.

Special registers

Temporary memory locations that hold critical processing parameters, such as the program counter, stack pointer, and program status word.

Program counter

Holds the memory address of the next instruction the CPU needs to act upon.

Stack

Memory segment used by processes to communicate instructions and data to each other.

Program status word

Condition variable that indicates to the CPU what mode (kernel or user) instructions need to be carried out in.

User mode (problem state)

Protection mode that a CPU works within when carrying out less trusted process instructions.

Kernel mode (supervisory state, privilege mode)

Mode that a CPU works within when carrying out more trusted process instructions. The process has access to more computer resources when working in kernel versus user mode.

Address bus

Physical connections between processing components and memory segments used to communicate the physical memory addresses being used during processing procedures.

Data bus

Physical connections between processing components and memory segments used to transmit data being used during processing procedures.

Symmetric mode multiprocessing

When a computer has two or more CPUs and each CPU is being used in a load-balancing method.

Asymmetric mode multiprocessing

When a computer has two or more CPUs and one CPU is dedicated to a specific program while the other CPUs carry out general processing procedures.

Process

Program loaded in memory within an operating system.

Multiprogramming

Interleaved execution of more than one program (process) or task by a single operating system.

Multitasking

Simultaneous execution of more than one program (process) or task by a single operating system.

Cooperative multitasking

Multitasking scheduling scheme used by older operating systems to allow for computer resource time slicing. Processes had too much control over resources, which would allow for the programs or systems to “hang.”

Preemptive multitasking

Multitasking scheduling scheme used by operating systems to allow for computer resource time slicing. Used in newer, more stable operating systems.

Process states (ready, running, blocked)

Processes can be in various activity levels. Ready = waiting for its turn on the CPU. Running = instructions being executed by CPU. Blocked = process is “suspended” while it waits for input or another event.

Interrupts

Values assigned to computer components (hardware and software) to allow for efficient computer resource time slicing.

Maskable interrupt

Interrupt value assigned to a noncritical operating system activity.

Nonmaskable interrupt

Interrupt value assigned to a critical operating system activity.

Thread

Instruction set generated by a process when it has a specific activity that needs to be carried out by an operating system. When the activity is finished, the thread is destroyed.

Multithreading

Applications that can carry out multiple activities simultaneously by generating different instruction sets (threads).

Software deadlock

Two processes cannot complete their activities because each is waiting for a system resource that the other holds and will not release.
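Deadlock can be pictured as a cycle in a "waits for" graph. The sketch below assumes a simplified model in which each process waits on at most one other process; the function name and input format are hypothetical.

```python
def find_deadlock(waits_for: dict) -> list:
    """Return the processes that form a wait-for cycle, or an empty list."""
    for start in waits_for:
        path = [start]
        current = waits_for.get(start)
        # Follow the chain of waits until it ends or loops back.
        while current is not None and current not in path:
            path.append(current)
            current = waits_for.get(current)
        if current is not None:
            # We walked back onto the path, so these processes are stuck.
            return path[path.index(current):]
    return []
```

With `{"A": "B", "B": "A"}` both processes are reported; with `{"A": "B", "B": "C"}` the chain ends at C and no deadlock is found.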

Process isolation

Protection mechanism provided by operating systems that can be implemented as encapsulation, time multiplexing of shared resources, naming distinctions, and virtual memory mapping.

When a process is encapsulated, no other process understands or interacts with its internal programming code.

Encapsulation provides data hiding, which means that outside software components will not know how a process works and will not be able to manipulate the process’s internal code. This is an integrity mechanism and enforces modularity in programming code.

Time multiplexing

A technology that allows processes to use the same resources by giving each process its own slice of time on them.

Virtual address mapping

Allows the different processes to have their own memory space. The memory manager ensures no processes improperly interact with another process’s memory. This provides integrity and confidentiality for the individual processes and their data and an overall stable processing environment for the operating system.

The goals of memory management are to:

- Provide an abstraction level for programmers

- Maximize performance with the limited amount of memory available

- Protect the operating system and applications loaded into memory

The memory manager has five basic responsibilities:

- Relocation

  - Swap contents from RAM to the hard drive as needed

  - Provide pointers for applications if their instructions and memory segment have been moved to a different location in main memory

- Protection

  - Limit processes to interact only with the memory segments assigned to them

  - Provide access control to memory segments

- Sharing

  - Use complex controls to ensure integrity and confidentiality when processes need to use the same shared memory segments

  - Allow many users with different levels of access to interact with the same application running in one memory segment

- Logical organization

  - Segment all memory types and provide an addressing scheme for each at an abstraction level

  - Allow for the sharing of specific software modules, such as dynamic link library (DLL) procedures

- Physical organization

  - Segment the physical memory space for application and operating system processes

Dynamic link libraries (DLLs)

A set of subroutines that are shared by different applications and operating system processes.

Base registers

Beginning of address space assigned to a process. Used to ensure a process does not make a request outside its assigned memory boundaries.

Limit registers

Ending of address space assigned to a process. Used to ensure a process does not make a request outside its assigned memory boundaries.
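The base and limit check amounts to a simple range comparison. In real systems the hardware performs it on every memory reference; the Python below is only a model with made-up addresses.

```python
class MemoryFault(Exception):
    """Raised when a process references memory outside its assigned space."""


def check_access(address: int, base: int, limit: int) -> int:
    """Allow the reference only if base <= address <= limit."""
    if not base <= address <= limit:
        raise MemoryFault(
            f"address {address:#x} is outside [{base:#x}, {limit:#x}]"
        )
    return address
```

A process assigned `0x1000` through `0x1FFF` can reference `0x1500`, but a reference to `0x2000` raises a fault.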

Memory Protection Issues:

- Every address reference is validated for protection.

- Two or more processes can share access to the same segment with potentially different access rights.

- Different instruction and data types can be assigned different levels of protection.

- Processes cannot generate an unpermitted address or gain access to an unpermitted segment

RAM

Memory sticks that are plugged into a computer’s motherboard and work as volatile memory space for an operating system.

Additional types of RAM you should be familiar with:

- Synchronous DRAM (SDRAM): Synchronizes itself with the system’s CPU and synchronizes signal input and output on the RAM chip. It coordinates its activities with the CPU clock so the timing of the CPU and the timing of the memory activities are synchronized. This increases the speed of transmitting and executing data.

- Extended data out DRAM (EDO DRAM): This is faster than DRAM because DRAM can access only one block of data at a time, whereas EDO DRAM can capture the next block of data while the first block is being sent to the CPU for processing. It has a type of “look ahead” feature that speeds up memory access.

- Burst EDO DRAM (BEDO DRAM): Works like (and builds upon) EDO DRAM in that it can transmit data to the CPU as it carries out a read operation, but it can send more data at once (burst). It reads and sends up to four memory addresses in a small number of clock cycles.

- Double data rate SDRAM (DDR SDRAM): Carries out read operations on both the rising and falling edges of a clock pulse. So instead of carrying out one operation per clock cycle, it carries out two and thus can deliver twice the throughput of SDRAM. Basically, it doubles the speed of memory activities, when compared to SDRAM, with a smaller number of clock cycles.

Thrashing

When a computer spends more time moving data from one small portion of memory to another than actually processing the data.

ROM

Nonvolatile memory that is used on motherboards for BIOS functionality and various device controllers to allow for operating system-to-device communication. Sometimes used for off-loading graphic rendering or cryptographic functionality.

Types of ROM:

- Programmable read-only memory (PROM) is a form of ROM that can be modified after it has been manufactured. PROM can be programmed only one time because the voltage that is used to write bits into the memory cells actually burns out the fuses that connect the individual memory cells.

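- Erasable programmable read-only memory (EPROM) can be erased, modified, and upgraded. EPROM is programmed electrically and erased by exposing the chip to ultraviolet light; electrically erasable PROM (EEPROM) can instead be erased and rewritten electrically.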

- Flash memory is a special type of memory that is used in digital cameras, BIOS chips, memory cards, and video game consoles. It is a solid-state technology, meaning it does not have moving parts and is used more as a type of hard drive than memory.

Hardware segmentation

Physically mapping software to individual memory segments.

Cache memory

Fast and expensive memory type that is used by a CPU to speed up read and write operations.

Absolute addresses

Hardware addresses used by the CPU.

Logical addresses

Indirect addressing used by processes within an operating system. The memory manager carries out logical-to-absolute address mapping.

Stack

Memory construct that is made up of individually addressable buffers. Process-to-process communication takes place through the use of stacks.

Buffer overflow

Too much data is put into the buffers that make up a stack. Common attack vector used by hackers to run malicious code on a target system.

Bounds checking

Ensuring the inputted data are of an acceptable length.
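Python is memory safe, so it cannot overflow a buffer the way C can, but the length check that C code must perform can still be modeled. The buffer size and function name below are arbitrary.

```python
BUFFER_SIZE = 16  # arbitrary fixed size for the illustration


def copy_to_buffer(data: bytes) -> bytearray:
    """Copy input into a fixed-size buffer only after checking its length."""
    if len(data) > BUFFER_SIZE:
        raise ValueError(
            f"input is {len(data)} bytes; buffer holds {BUFFER_SIZE}"
        )
    buffer = bytearray(BUFFER_SIZE)
    buffer[:len(data)] = data
    return buffer
```

In a language without automatic bounds checking, skipping this comparison is what lets oversized input spill into adjacent memory.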

Address space layout randomization (ASLR)

Memory protection mechanism used by some operating systems. The addresses used by components of a process are randomized so that it is harder for an attacker to exploit specific memory vulnerabilities.

Data execution prevention (DEP)

Memory protection mechanism used by some operating systems. Memory segments may be marked as nonexecutable so that they cannot be misused by malicious software.

Garbage collector

Tool that marks unused memory segments as usable to ensure that an operating system does not run out of memory. Used to protect against memory leaks.

Virtual memory

Combination of main memory (RAM) and secondary memory within an operating system.

Interrupt

Software or hardware signal that indicates that system resources (i.e., CPU) are needed for instruction processing.

Programmable I/O

The CPU sends data to an I/O device and polls the device to see if it is ready to accept more data.

Interrupt-driven I/O

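The CPU sends a character over to the printer and then goes and works on another process’s request. When the printer has finished with the character, it sends an interrupt to the CPU, which then sends the next character.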

I/O using Direct memory access (DMA)

A way of transferring data between I/O devices and the system’s memory without using the CPU.

Premapped I/O

The CPU sends the physical memory address of the requesting process to the I/O device, and the I/O device is trusted enough to interact with the contents of memory directly, so the CPU does not control the interactions between the I/O device and memory.

Fully Mapped I/O

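Under fully mapped I/O, the operating system does not trust the I/O device. The device is not given the physical memory address; it works only with logical addresses, under the security context of the requesting process.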

Instruction set

Set of operations and commands that can be implemented by a particular processor (CPU).

Microarchitecture

Specific design of a microprocessor, which includes physical components (registers, logic gates, ALU, cache, etc.) that support a specific instruction set.

Ring-based architecture (Protection Rings)

Mechanisms to protect data and functionality from faults (by improving fault tolerance) and malicious behaviour through two or more hierarchical levels or layers of privilege within the architecture of a computer system.

Application programming interface

Software interface that enables process-to-process interaction. Common way to provide access to standard routines to a set of software programs.

Monolithic operating system architecture

All of the code of the operating system working in kernel mode in an ad hoc and nonmodularized manner.

Layered operating system architecture

Architecture that separates system functionality into hierarchical layers.

Data hiding

Use of segregation in design decisions to protect software components from negatively interacting with each other. Commonly enforced through strict interfaces.

Microkernel architecture

Reduced amount of code running in kernel mode carrying out critical operating system functionality. Only the absolutely necessary code runs in kernel mode, and the remaining operating system code runs in user mode.

Hybrid microkernel architecture

Combination of monolithic and microkernel architectures. The microkernel carries out critical operating system functionality, and the remaining functionality is carried out in a client/server model within kernel mode.

Mode transition

When the CPU has to change from processing code in user mode to kernel mode. This is a protection measure, but it causes a performance hit.

Virtualization

Creation of a simulated environment (hardware platform, operating system, storage, etc.) that allows for central control and scalability.

Hypervisor

Central program used to manage virtual machines (guests) within a simulated environment (host).

Security policy

Strategic tool used to dictate how sensitive information and resources are to be managed and protected.

Trusted computing base

A collection of all the hardware, software, and firmware components within a system that provide security and enforce the system’s security policy.

Trusted path

Trustworthy software channel, one that cannot be circumvented, used for communication between two processes.

Security perimeter

Mechanism used to delineate between the components within and outside of the trusted computing base.

Reference monitor

Concept that defines a set of design requirements of a reference validation mechanism (security kernel), which enforces an access control policy over subjects’ (processes, users) ability to perform operations (read, write, execute) on objects (files, resources) on a system.

Security kernel

Hardware, software, and firmware components that fall within the TCB and implement and enforce the reference monitor concept.

The security kernel has three main requirements:

- It must provide isolation for the processes carrying out the reference monitor concept, and the processes must be tamperproof.

- It must be invoked for every access attempt and must be impossible to circumvent. Thus, the security kernel must be implemented in a complete and foolproof way.

- It must be small enough to be tested and verified in a complete and comprehensive manner.

Multilevel security policies

Outlines how a system can simultaneously process information at different classifications for users with different clearance levels.

State machine models

- To verify the security of a system, the state is used, which means that all current permissions and all current instances of subjects accessing objects must be captured.

- A system that has employed a state machine model will be in a secure state in each and every instance of its existence.

Security Models

Bell-LaPadula model:

This is the first mathematical model of a multilevel security policy that defines the concept of a secure state and necessary modes of access. It ensures that information only flows in a manner that does not violate the system policy and is confidentiality focused.

- The simple security rule

- A subject cannot read data at a higher security level (no read up).

- The *-property rule

- A subject cannot write to an object at a lower security level (no write down).

- The strong star property rule

- A subject can perform read and write functions only to the objects at its same security level.
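The three Bell-LaPadula rules can be sketched as simple comparisons between security levels. This is a minimal illustration, not a full implementation of the model; the `Level` names and the function names are assumptions chosen for the example.

```python
from enum import IntEnum


class Level(IntEnum):
    """Hypothetical classification levels, ordered from lowest to highest."""

    UNCLASSIFIED = 0
    CONFIDENTIAL = 1
    SECRET = 2
    TOP_SECRET = 3


def blp_can_read(subject: Level, obj: Level) -> bool:
    """Simple security rule: no read up."""
    return subject >= obj


def blp_can_write(subject: Level, obj: Level) -> bool:
    """*-property rule: no write down."""
    return subject <= obj


def blp_can_read_and_write(subject: Level, obj: Level) -> bool:
    """Strong star property: read and write only at the same level."""
    return subject == obj
```

For example, a subject cleared to SECRET can read CONFIDENTIAL data but cannot write to it, because that write could leak SECRET information downward.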

Biba model

A formal state transition model that describes a set of access control rules designed to ensure data integrity.

- The simple integrity axiom: A subject cannot read data at a lower integrity level (no read down).

- The *-integrity axiom: A subject cannot modify an object in a higher integrity level (no write up).
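The two Biba axioms are the mirror image of the Bell-LaPadula rules and can be sketched the same way. In this minimal example, integrity levels are plain integers where a larger number means higher integrity; that encoding and the function names are assumptions.

```python
def biba_can_read(subject_integrity: int, object_integrity: int) -> bool:
    """Simple integrity axiom: no read down."""
    return subject_integrity <= object_integrity


def biba_can_modify(subject_integrity: int, object_integrity: int) -> bool:
    """*-integrity axiom: no write up."""
    return subject_integrity >= object_integrity
```

A subject at integrity level 2 can read a level 3 object but cannot modify it, so less trusted data never contaminates more trusted data.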

Clark-Wilson model

This integrity model is implemented to protect the integrity of data and to ensure that properly formatted transactions take place. It addresses all three goals of integrity by using the following mechanisms:

- Subjects can access objects only through authorized programs (access triple).

- Separation of duties is enforced.

- Auditing is required.
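The three mechanisms above can be sketched in a few lines of Python. This is a simplified illustration only: the users, program names, and data item in `AUTHORIZED_TRIPLES` are hypothetical, and a real system would protect the audit log itself.

```python
# Hypothetical access triples: (user, program, data item).
AUTHORIZED_TRIPLES = {
    ("clerk", "create_order", "orders"),
    ("manager", "approve_order", "orders"),
}

audit_log = []


def run_program(user: str, program: str, data_item: str) -> str:
    """Allow access to a data item only through an authorized program."""
    allowed = (user, program, data_item) in AUTHORIZED_TRIPLES
    audit_log.append((user, program, data_item, allowed))  # auditing
    if not allowed:
        raise PermissionError(f"{user} may not run {program} on {data_item}")
    return f"{program} executed on {data_item} by {user}"


def users_violating_separation(triples, program_a: str, program_b: str) -> set:
    """Return users authorized for both of two conflicting programs."""
    users_a = {user for (user, program, _) in triples if program == program_a}
    users_b = {user for (user, program, _) in triples if program == program_b}
    return users_a & users_b
```

Here the clerk can create an order but cannot approve it, and `users_violating_separation` returns an empty set because no single user holds both duties.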

Brewer and Nash model

This model allows for dynamically changing access controls that protect against conflicts of interest. Also known as the Chinese Wall model.
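A rough sketch of how access can change dynamically under this model is shown below. The company names, the conflict-of-interest classes, and the `ChineseWall` class are all hypothetical; the point is only that a user's earlier access decides what they may access next.

```python
# Hypothetical mapping of datasets to conflict-of-interest classes.
CONFLICT_CLASS = {"BankA": "banking", "BankB": "banking", "OilCo": "energy"}


class ChineseWall:
    """Tracks each user's access history and blocks conflicting datasets."""

    def __init__(self):
        self.history = {}  # user -> set of datasets already accessed

    def request_access(self, user: str, dataset: str) -> bool:
        accessed = self.history.setdefault(user, set())
        for prior in accessed:
            # Deny a different dataset from the same conflict class.
            if prior != dataset and CONFLICT_CLASS[prior] == CONFLICT_CLASS[dataset]:
                return False
        accessed.add(dataset)
        return True


wall = ChineseWall()
```

Once a user has accessed BankA, a request for BankB is denied, while OilCo remains available because it belongs to a different conflict class.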

Security Modes

Dedicated Security Mode

All users must have:

- Proper clearance for all information on the system

- Formal access approval for all information on the system

- A signed NDA for all information on the system

- A valid need-to-know for all information on the system

As a result, all users can access all data.

System High-Security Mode

All users must have:

- Proper clearance for all information on the system

- Formal access approval for all information on the system

- A signed NDA for all information on the system

- A valid need-to-know for some information on the system

As a result, all users can access some data, based on their need-to-know.

Compartmented Security Mode

All users must have:

- Proper clearance for the highest level of data classification on the system

- Formal access approval for some information on the system

- A signed NDA for all information they will access on the system

- A valid need-to-know for some of the information on the system

As a result, all users can access some data, based on their need-to-know and formal access approval.

Multilevel Security Mode

All users must have:

- Proper clearance for some of the information on the system

- Formal access approval for some of the information on the system

- A signed NDA for all information on the system

- A valid need-to-know for some of the information on the system

As a result, all users can access some data, based on their need-to-know, clearance, and formal access approval.
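The four modes differ only in whether each requirement covers all or some of the information on the system, which can be summarized in a small lookup table. This is a study aid rather than an access control implementation; the dictionary keys and the function name are assumptions.

```python
# Scope of each requirement per mode: "all" or "some" of the information.
# In compartmented mode, clearance for the highest classification level
# is treated here as covering all information on the system.
SECURITY_MODES = {
    "dedicated": {"clearance": "all", "formal_approval": "all", "need_to_know": "all"},
    "system_high": {"clearance": "all", "formal_approval": "all", "need_to_know": "some"},
    "compartmented": {"clearance": "all", "formal_approval": "some", "need_to_know": "some"},
    "multilevel": {"clearance": "some", "formal_approval": "some", "need_to_know": "some"},
}


def restricting_requirements(mode: str) -> list:
    """Return the requirements that limit which data a user can access."""
    return [name for name, scope in SECURITY_MODES[mode].items() if scope == "some"]
```

Calling `restricting_requirements("dedicated")` returns an empty list, matching the rule that all users can access all data in that mode.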

The Common Criteria

Under the Common Criteria model, an evaluation is carried out on a product and it is assigned an Evaluation Assurance Level (EAL).

- EAL1: Functionally tested

- EAL2: Structurally tested

- EAL3: Methodically tested and checked

- EAL4: Methodically designed, tested, and reviewed

- EAL5: Semiformally designed and tested

- EAL6: Semiformally verified design and tested

- EAL7: Formally verified design and tested

The Common Criteria uses protection profiles in its evaluation process.

A protection profile contains the following five sections:

- Descriptive elements

- Provides the name of the profile and a description of the security problem to be solved.

- Rationale

- Justifies the profile and gives a more detailed description of the real-world problem to be solved. The environment, usage assumptions, and threats are illustrated along with guidance on the security policies that can be supported by products and systems that conform to this profile.

- Functional requirements

- Establishes a protection boundary, meaning the threats or compromises within this boundary to be countered. The product or system must enforce the boundary established in this section.

- Development assurance requirements

- Identifies the specific requirements the product or system must meet during the development phases, from design to implementation.

- Evaluation assurance requirements

- Establishes the type and intensity of the evaluation.

Systems Evaluation Methods

- In the Trusted Computer System Evaluation Criteria (TCSEC), commonly known as the Orange Book, the lower assurance level ratings look at a system’s protection mechanisms and testing results to produce an assurance rating, but the higher assurance level ratings look more at the system design, specifications, development procedures, supporting documentation, and testing results.

- An assurance evaluation examines the security-relevant parts of a system, meaning the TCB, access control mechanisms, reference monitor, kernel, and protection mechanisms. The relationship and interaction between these components are also evaluated.

- The Orange Book was used to evaluate whether a product contained the security properties the vendor claimed it did and whether the product was appropriate for a specific application or function.

- TCSEC addresses confidentiality, but not integrity. Functionality of the security mechanisms and the assurance of those mechanisms are not evaluated separately, but rather are combined and rated as a whole.

- TCSEC provides a classification system that is divided into hierarchical divisions of assurance levels:

- A. Verified protection

- B. Mandatory protection

- B1: Labeled Security

- Each data object must contain a classification label and each subject must have a clearance label. When a subject attempts to access an object, the system must compare the subject’s and object’s security labels to ensure the requested actions are acceptable. Data leaving the system must also contain an accurate security label. The security policy is based on an informal statement, and the design specifications are reviewed and verified.

- B2: Structured Protection

- The security policy is clearly defined and documented, and the system design and implementation are subjected to more thorough review and testing procedures. This class requires more stringent authentication mechanisms and well-defined interfaces among layers. Subjects and devices require labels, and the system must not allow covert channels.

- B3: Security Domains

- In this class, more granularity is provided in each protection mechanism, and the programming code that is not necessary to support the security policy is excluded.

- C. Discretionary protection

- C1: Discretionary Security Protection

- Discretionary access control is based on individuals and/or groups. It requires a separation of users and information, and identification and authentication of individual entities.

- C2: Controlled Access Protection

- Users need to be identified individually to provide more precise access control and auditing functionality. Logical access control mechanisms are used to enforce authentication and the uniqueness of each individual’s identification.

- D. Minimal security

- Within each division, the classes with higher numbers offer a greater degree of trust and assurance. The criteria break down into seven different areas:

- Security policy: The policy must be explicit and well defined and enforced by the mechanisms within the system.

- Identification: Individual subjects must be uniquely identified.

- Labels: Access control labels must be associated properly with objects.

- Documentation: Documentation must be provided, including test, design, and specification documents, user guides, and manuals.

- Accountability: Audit data must be captured and protected to enforce accountability.

- Life-cycle assurance: Software, hardware, and firmware must be able to be tested individually to ensure that each enforces the security policy in an effective manner throughout their lifetimes.

- Continuous protection: The security mechanisms and the system as a whole must perform predictably and acceptably in different situations continuously.

- The Trusted Network Interpretation (TNI) is commonly known as the Red Book. The following is a brief overview of the security items addressed in the Red Book:

- Communication integrity

- Authentication: Protects against masquerading and playback attacks. Mechanisms include digital signatures, encryption, timestamps, and passwords.

- Message integrity: Protects the protocol header, routing information, and packet payload from being modified. Mechanisms include message authentication and encryption.

- Nonrepudiation: Ensures that a sender cannot deny sending a message. Mechanisms include encryption, digital signatures, and notarization.

- Denial-of-service prevention

- Continuity of operations: Ensures that the network is available even if attacked. Mechanisms include fault-tolerant and redundant systems and the capability to reconfigure network parameters in case of an emergency.

- Network management: Monitors network performance and identifies attacks and failures. Mechanisms include components that enable network administrators to monitor and restrict resource access.

- Compromise protection

- Data confidentiality: Protects data from being accessed in an unauthorized manner during transmission. Mechanisms include access controls, encryption, and physical protection of cables.

- Traffic flow confidentiality: Ensures that unauthorized entities are not aware of routing information or frequency of communication via traffic analysis. Mechanisms include padding messages, sending noise, or sending false messages.

- Selective routing: Routes messages in a way to avoid specific threats. Mechanisms include network configuration and routing tables.

- The Information Technology Security Evaluation Criteria (ITSEC) was the first attempt by many European countries to establish a single standard for evaluating the security attributes of computer systems and products.

- ITSEC evaluates two main attributes of a system’s protection mechanisms: functionality and assurance.

- A difference between ITSEC and TCSEC is that TCSEC bundles functionality and assurance into one rating, whereas ITSEC evaluates these two attributes separately.

Penetration testing

Steps for vulnerability assessment

- Vulnerability scanning

- Analysis

- Communicate results

Penetration test strategies

• External testing

• Internal testing

• Blind testing

• Double-blind testing

Categories of penetration testing

• Zero knowledge